Once you have successfully trained a neural network, the next question becomes: how do you embed it into your C++ application? Perhaps you've already looked at Darknet's legacy C API, with functions like load_network_custom(), do_nms_sort(), and get_network_boxes(). That API is not easy to work with, and there is little documentation or example code.

(In case it helps, I did put together a blog post with a few details in late August 2019: https://www.ccoderun.ca/programming/2019-08-25_Darknet_C_CPP/.)

DarkHelp lets you skip those C function calls and replaces them with a simple, easy-to-use C++ API. The DarkHelp C++ library is available on Linux and Windows.

You load the neural network configuration and weights files, then call DarkHelp::NN::predict() once for each image you want analyzed. Each call returns a new std::vector of predictions.

Many applications need to annotate images, especially during debugging, so DarkHelp provides DarkHelp::NN::annotate() to mark up images with the detection results. To make it easier to integrate into larger projects, DarkHelp uses OpenCV's standard cv::Mat images rather than Darknet's internal image structure. This is an example of what DarkHelp::NN::annotate() can do with an image and a neural network that detects barcodes:

If you're looking for some sample code to get started, this example loads a network and then loops through several image files:

The predictions are stored in a std::vector of structures. (See DarkHelp::PredictionResults.) You can get this vector and iterate through the results like this:

If you have multiple classes defined in your network, then you may want to look at DarkHelp::PredictionResult::all_probabilities, not only DarkHelp::PredictionResult::best_class and DarkHelp::PredictionResult::best_probability.

The following is the shortest, simplest self-contained example showing how to load a network, run it against a set of images provided on the command line, and then output the results as a series of coordinates, names, etc.:

Example output from sending the results to std::cout, as in the code in the previous block:

If you call DarkHelp::NN::annotate() to get back an OpenCV cv::Mat object, you can then display the annotated image or save it as a JPG or PNG file. For example:

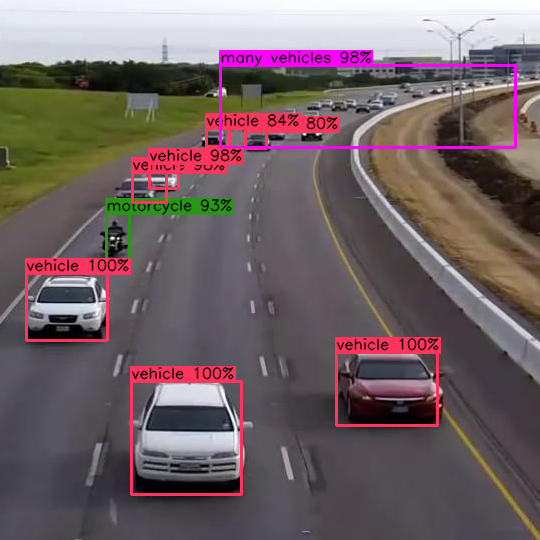

The call to cv::imwrite() in the previous example might produce an image similar to this one:

Note that DarkHelp uses OpenCV internally, whether or not the client code calls DarkHelp::NN::annotate(). This means that when you link against the libdarkhelp library, you also need to link against OpenCV.