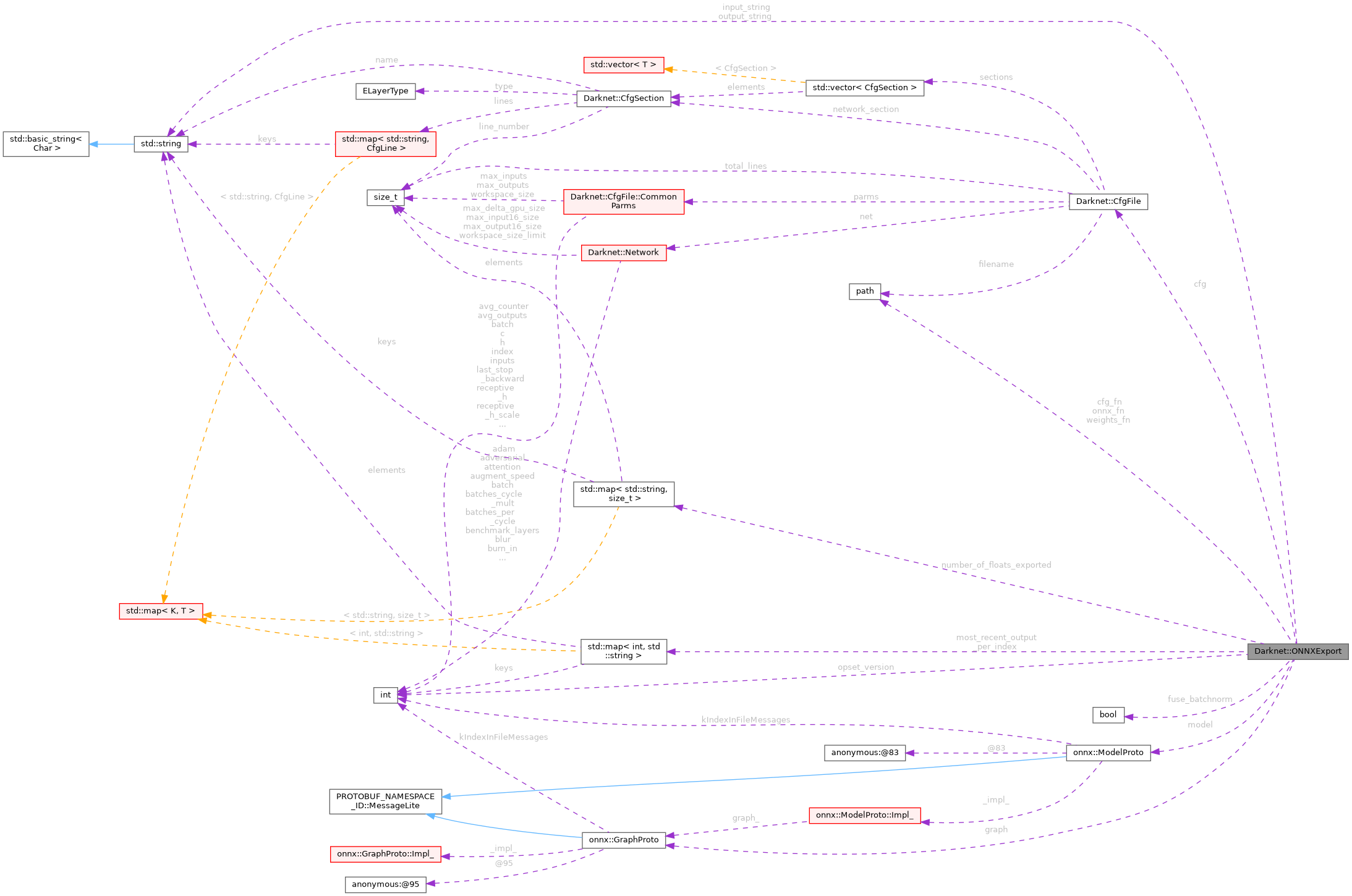

Everything we need to convert .cfg and .weights to .onnx is contained within this class.

#include "darknet_onnx.hpp"

Public Member Functions

| ONNXExport (const std::filesystem::path &cfg_filename, const std::filesystem::path &weights_filename, const std::filesystem::path &onnx_filename) | |

| Constructor. | |

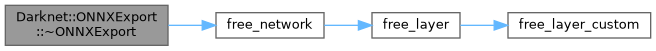

| ~ONNXExport () | |

| Destructor. | |

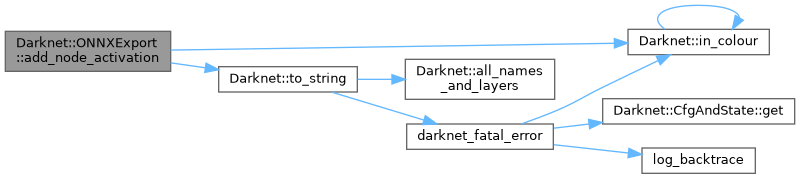

| ONNXExport & | add_node_activation (const size_t index, Darknet::CfgSection &section) |

| ONNXExport & | add_node_bn (const size_t index, Darknet::CfgSection &section) |

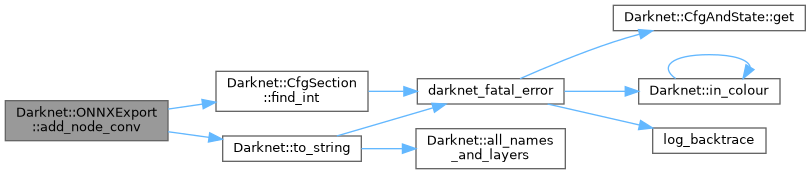

| ONNXExport & | add_node_conv (const size_t index, Darknet::CfgSection &section) |

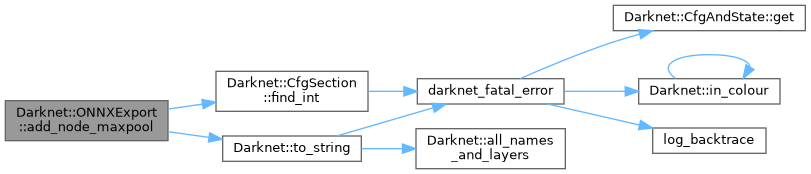

| ONNXExport & | add_node_maxpool (const size_t index, Darknet::CfgSection &section) |

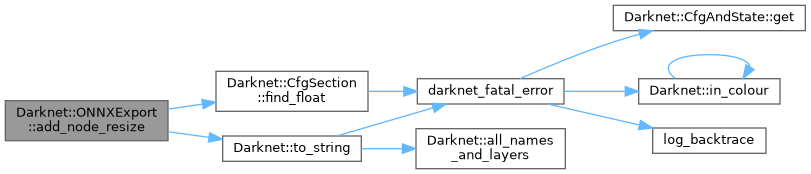

| ONNXExport & | add_node_resize (const size_t index, Darknet::CfgSection &section) |

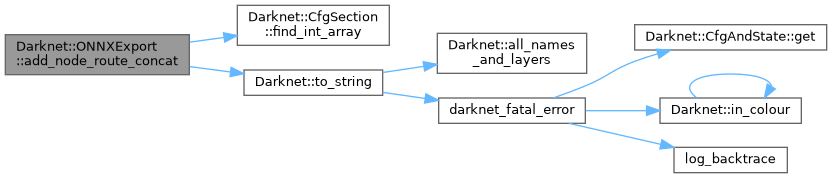

| ONNXExport & | add_node_route_concat (const size_t index, Darknet::CfgSection &section) |

| ONNXExport & | add_node_route_split (const size_t index, Darknet::CfgSection &section) |

| ONNXExport & | add_node_shortcut (const size_t index, Darknet::CfgSection &section) |

| ONNXExport & | add_node_yolo (const size_t index, Darknet::CfgSection &section) |

| ONNXExport & | build_model () |

| ONNXExport & | check_activation (const size_t index, Darknet::CfgSection &section) |

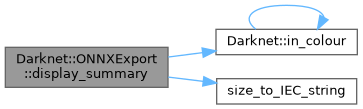

| ONNXExport & | display_summary () |

| Display some general information about the protocol buffer model. | |

| ONNXExport & | initialize_model () |

| Initialize some of the simple Protocol Buffers model fields. | |

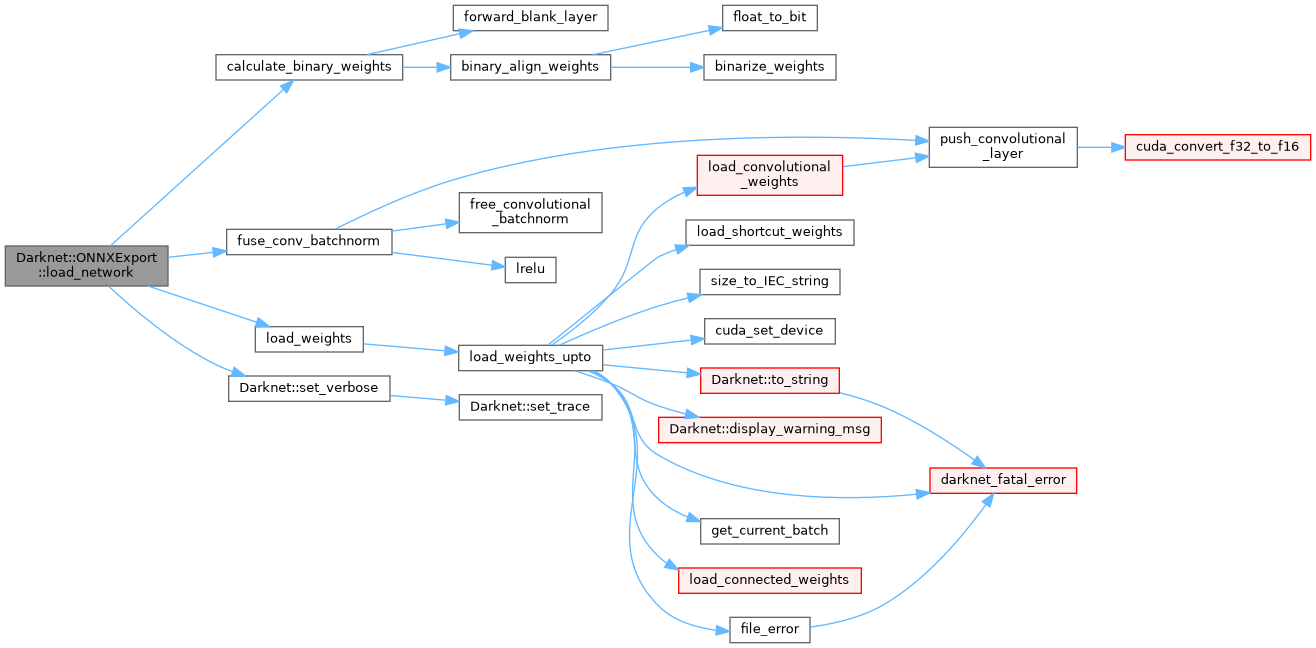

| ONNXExport & | load_network () |

| Use Darknet to load the neural network. | |

| ONNXExport & | populate_graph_initializer (const float *f, const size_t n, const size_t idx, const Darknet::Layer &l, const std::string &name, const bool simple=false) |

| ONNXExport & | populate_graph_input_frame () |

| ONNXExport & | populate_graph_nodes () |

| ONNXExport & | populate_graph_output () |

| ONNXExport & | populate_input_output_dimensions (onnx::ValueInfoProto *proto, const std::string &name, const int v1, const int v2=-1, const int v3=-1, const int v4=-1, const size_t line_number=0) |

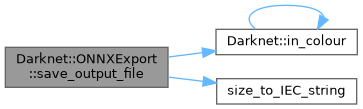

| ONNXExport & | save_output_file () |

| Save the entire model as an .onnx file. | |
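
Nearly every member function listed above returns `ONNXExport &`, which suggests the calls are meant to be chained. The block below is a minimal, self-contained sketch of that pattern. `ExporterSketch` and `run_chain()` are hypothetical stand-ins and not part of Darknet, and the order of the calls is an assumption made for illustration rather than the documented sequence.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for illustration only; this is NOT Darknet::ONNXExport.
// It simply records the order in which its steps are called.
class ExporterSketch
{
public:
    // Each step returns *this so that calls can be chained.
    ExporterSketch & load_network()     { steps.push_back("load_network");     return *this; }
    ExporterSketch & initialize_model() { steps.push_back("initialize_model"); return *this; }
    ExporterSketch & build_model()      { steps.push_back("build_model");      return *this; }
    ExporterSketch & save_output_file() { steps.push_back("save_output_file"); return *this; }

    std::vector<std::string> steps;
};

// Runs the steps as one chained expression and returns the recorded order.
// ASSUMPTION: this call order is illustrative, not taken from the Darknet sources.
inline std::vector<std::string> run_chain()
{
    ExporterSketch e;
    e.load_network().initialize_model().build_model().save_output_file();
    assert(e.steps.size() == 4);
    return e.steps;
}
```

Returning a reference instead of `void` lets a caller express a multi-step export as one statement while still allowing each step to be called on its own.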

Static Public Member Functions

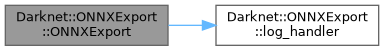

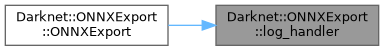

| static void | log_handler (google::protobuf::LogLevel level, const char *filename, int line, const std::string &message) |

| Callback function that Protocol Buffers calls to log messages. | |

Public Attributes

| Darknet::CfgFile | cfg |

| std::filesystem::path | cfg_fn |

| bool | fuse_batchnorm |

| Whether or not we need to fuse batchnorm (fuse and dontfuse on the CLI). | |

| onnx::GraphProto * | graph |

| std::string | input_string |

| The dimensions used in populate_graph_input_frame(). | |

| onnx::ModelProto | model |

| std::map< int, std::string > | most_recent_output_per_index |

| Keep track of the single most recent output name for each of the layers. | |

| std::map< std::string, size_t > | number_of_floats_exported |

| The key is the last part of the string, and the value is the number of floats. | |

| std::filesystem::path | onnx_fn |

| int | opset_version |

| Which opset version to use (10, 18, ...)? | |

| std::string | output_string |

| The output nodes for this neural network. | |

| std::filesystem::path | weights_fn |

Everything we need to convert .cfg and .weights to .onnx is contained within this class.

| Darknet::ONNXExport::ONNXExport | ( | const std::filesystem::path & | cfg_filename, |

| const std::filesystem::path & | weights_filename, | ||

| const std::filesystem::path & | onnx_filename | ||

| ) |

Constructor.

| Darknet::ONNXExport::~ONNXExport | ( | ) |

Destructor.

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_activation | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_bn | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_conv | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_maxpool | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_resize | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_route_concat | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

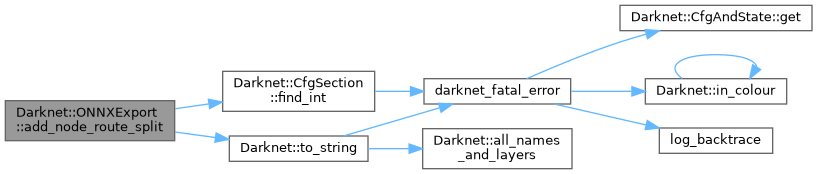

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_route_split | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |


| Darknet::ONNXExport & Darknet::ONNXExport::add_node_shortcut | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

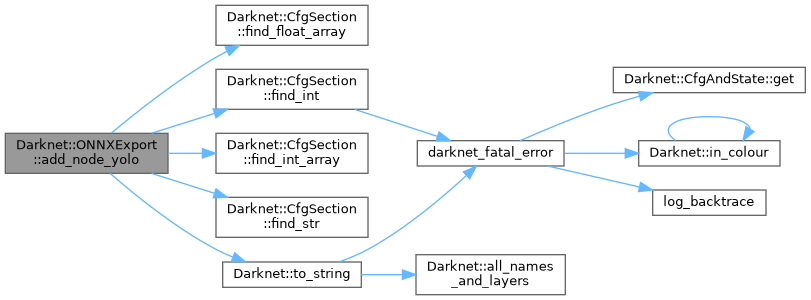

| Darknet::ONNXExport & Darknet::ONNXExport::add_node_yolo | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

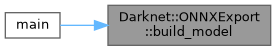

| Darknet::ONNXExport & Darknet::ONNXExport::build_model | ( | ) |

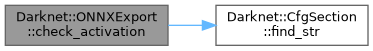

| Darknet::ONNXExport & Darknet::ONNXExport::check_activation | ( | const size_t | index, |

| Darknet::CfgSection & | section | ||

| ) |

| Darknet::ONNXExport & Darknet::ONNXExport::display_summary | ( | ) |

Display some general information about the protocol buffer model.

| Darknet::ONNXExport & Darknet::ONNXExport::initialize_model | ( | ) |

Initialize some of the simple Protocol Buffers model fields.

| Darknet::ONNXExport & Darknet::ONNXExport::load_network | ( | ) |

Use Darknet to load the neural network.

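| static void Darknet::ONNXExport::log_handler | ( | google::protobuf::LogLevel | level, |

| const char * | filename, | ||

| int | line, | ||

| const std::string & | message | ||

| ) |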

Callback function that Protocol Buffers calls to log messages.

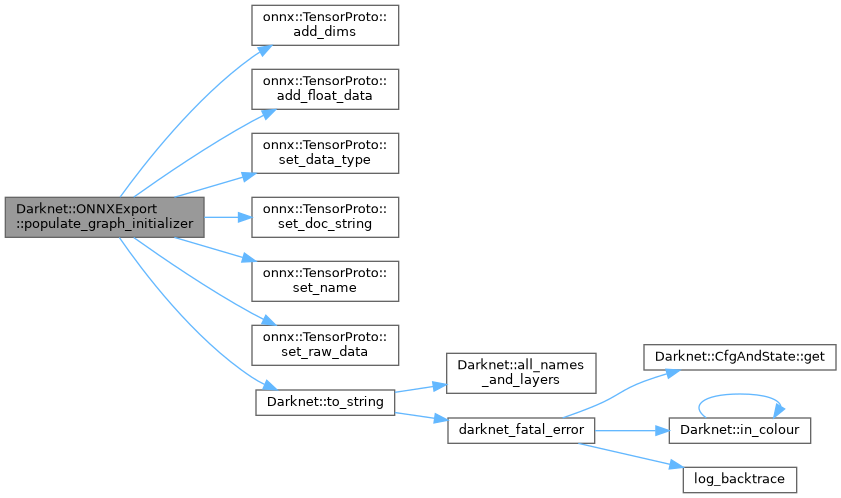

| Darknet::ONNXExport & Darknet::ONNXExport::populate_graph_initializer | ( | const float * | f, |

| const size_t | n, | ||

| const size_t | idx, | ||

| const Darknet::Layer & | l, | ||

| const std::string & | name, | ||

| const bool | simple = false | ||

| ) |

| Darknet::ONNXExport & Darknet::ONNXExport::populate_graph_input_frame | ( | ) |

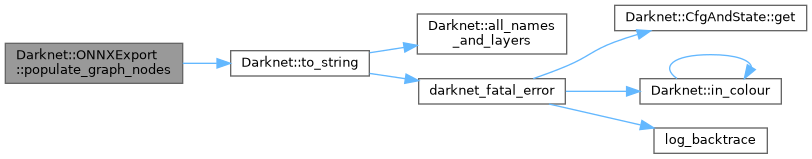

| Darknet::ONNXExport & Darknet::ONNXExport::populate_graph_nodes | ( | ) |

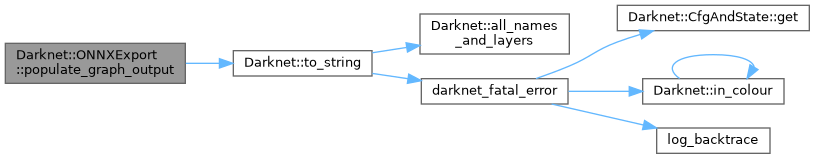

| Darknet::ONNXExport & Darknet::ONNXExport::populate_graph_output | ( | ) |

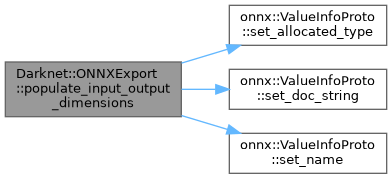

| Darknet::ONNXExport & Darknet::ONNXExport::populate_input_output_dimensions | ( | onnx::ValueInfoProto * | proto, |

| const std::string & | name, | ||

| const int | v1, | ||

| const int | v2 = -1, | ||

| const int | v3 = -1, | ||

| const int | v4 = -1, | ||

| const size_t | line_number = 0 | ||

| ) |
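
The signature of populate_input_output_dimensions() takes one mandatory dimension (`v1`) and three optional ones (`v2`, `v3`, `v4`) that default to -1. A plausible reading is that -1 acts as a "not supplied" sentinel so the same function can describe tensors of rank 1 through 4. The sketch below illustrates that idea only; `collect_dimensions()` is a hypothetical helper, and the meaning assigned to -1 is an assumption, not something this page confirms.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper, not part of Darknet.
// ASSUMPTION: a value of -1 (the default for v2..v4) means "dimension not used".
inline std::vector<int> collect_dimensions(const int v1, const int v2 = -1, const int v3 = -1, const int v4 = -1)
{
    assert(v1 != -1); // the first dimension has no default and must be supplied

    std::vector<int> dims;
    for (const int v : {v1, v2, v3, v4})
    {
        if (v != -1)
        {
            dims.push_back(v); // keep only the dimensions the caller supplied
        }
    }
    return dims;
}
```

Under that assumption, `collect_dimensions(1, 3, 416, 416)` yields a rank-4 shape and `collect_dimensions(80)` yields a rank-1 shape.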

| Darknet::ONNXExport & Darknet::ONNXExport::save_output_file | ( | ) |

Save the entire model as an .onnx file.

| Darknet::CfgFile Darknet::ONNXExport::cfg |

| std::filesystem::path Darknet::ONNXExport::cfg_fn |

| bool Darknet::ONNXExport::fuse_batchnorm |

Whether or not we need to fuse batchnorm (fuse and dontfuse on the CLI).
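
For readers unfamiliar with the term, fusing batchnorm usually means folding the batch normalization parameters into the weights and bias of the preceding convolution, so the exported graph needs no separate normalization node. The block below sketches the standard per-channel arithmetic. `FusedChannel`, `fuse_channel()`, and the default `epsilon` are assumptions for illustration, and this page does not confirm that Darknet's exporter performs the fusion exactly this way.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical result type: the adjusted weights and bias for one output channel.
struct FusedChannel
{
    std::vector<float> weights;
    float bias;
};

// Folds one channel's batchnorm parameters into its convolution weights and bias:
//   scale   = gamma / sqrt(variance + epsilon)
//   weights = weights * scale
//   bias    = beta + (bias - mean) * scale
// ASSUMPTION: the default epsilon is illustrative, not taken from Darknet.
inline FusedChannel fuse_channel(const std::vector<float> & weights, const float bias,
        const float gamma, const float beta, const float mean, const float variance,
        const float epsilon = 1e-5f)
{
    assert(variance + epsilon > 0.0f);
    const float scale = gamma / std::sqrt(variance + epsilon);

    FusedChannel out;
    out.weights.reserve(weights.size());
    for (const float w : weights)
    {
        out.weights.push_back(w * scale);
    }
    out.bias = beta + (bias - mean) * scale;
    return out;
}
```

With `gamma = 4`, `variance = 4`, and `epsilon = 0`, the scale is 2, so weights `{1, 2}` become `{2, 4}`.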

| onnx::GraphProto* Darknet::ONNXExport::graph |

| std::string Darknet::ONNXExport::input_string |

The dimensions used in populate_graph_input_frame().

| onnx::ModelProto Darknet::ONNXExport::model |

| std::map<int, std::string> Darknet::ONNXExport::most_recent_output_per_index |

Keep track of the single most recent output name for each of the layers.
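
A single Darknet layer can expand into several ONNX nodes (for example a convolution followed by batchnorm and an activation), so a later layer that refers back to it needs the name of the last output produced. The sketch below shows how a `std::map<int, std::string>` can serve that purpose. The helper functions and the output names such as "000_leaky" are hypothetical and chosen only for illustration.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical helper: records output_name as the latest output for a layer,
// replacing any earlier entry for that same layer index.
inline void set_most_recent(std::map<int, std::string> & m, const int index, const std::string & output_name)
{
    assert(index >= 0);
    m[index] = output_name;
}

// Hypothetical helper: returns the name a later node would use as its input
// when it refers to the given layer, or an empty string if none was recorded.
inline std::string get_most_recent(const std::map<int, std::string> & m, const int index)
{
    const auto iter = m.find(index);
    return (iter == m.end()) ? std::string() : iter->second;
}
```

After recording "000_conv", "000_bn", and "000_leaky" for layer 0 in that order, a lookup for layer 0 returns "000_leaky".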

| std::map<std::string, size_t> Darknet::ONNXExport::number_of_floats_exported |

The key is the last part of the string, and the value is the number of floats.

For example, for "000_conv_bias", we store the key as "bias".
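
The keying rule described above can be sketched as follows. `last_part()` and `record_floats()` are hypothetical helpers, not Darknet functions, and the sketch assumes that the "last part" is everything after the final underscore and that counts are summed across layers sharing the same suffix.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Hypothetical helper: returns the text after the last underscore,
// e.g. "000_conv_bias" gives "bias". A name without an underscore is
// returned unchanged.
inline std::string last_part(const std::string & name)
{
    const auto pos = name.rfind('_');
    return (pos == std::string::npos) ? name : name.substr(pos + 1);
}

// Hypothetical helper: adds n floats to the running total for the key derived from name.
// ASSUMPTION: totals accumulate across all layers that share the same suffix.
inline void record_floats(std::map<std::string, std::size_t> & totals, const std::string & name, const std::size_t n)
{
    assert(!name.empty());
    totals[last_part(name)] += n;
}
```

Recording 32 floats for "000_conv_bias" and 64 for "001_conv_bias" would leave a single "bias" entry holding 96.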

| std::filesystem::path Darknet::ONNXExport::onnx_fn |

| int Darknet::ONNXExport::opset_version |

Which opset version to use (10, 18, ...)?

| std::string Darknet::ONNXExport::output_string |

The output nodes for this neural network.

| std::filesystem::path Darknet::ONNXExport::weights_fn |