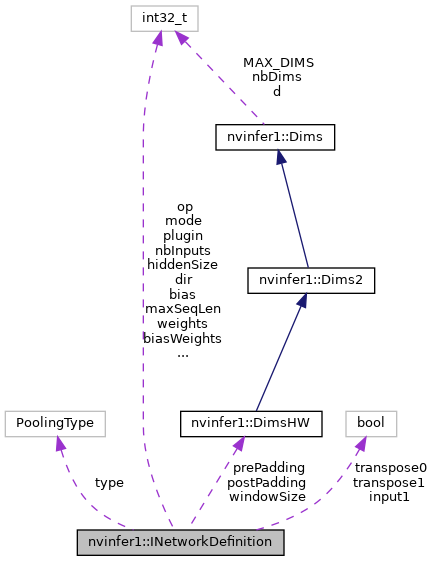

A network definition for input to the builder.

Public Member Functions | |

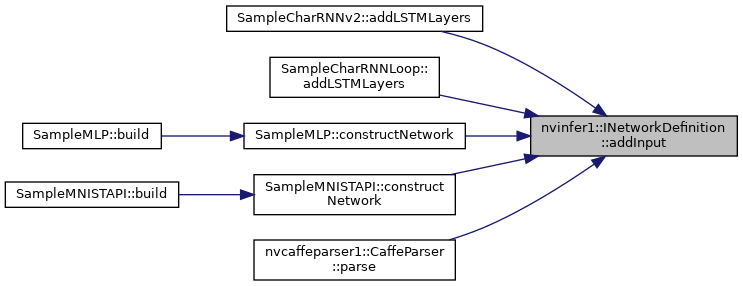

| virtual ITensor * | addInput (const char *name, DataType type, Dims dimensions)=0 |

| Add an input tensor to the network. More... | |

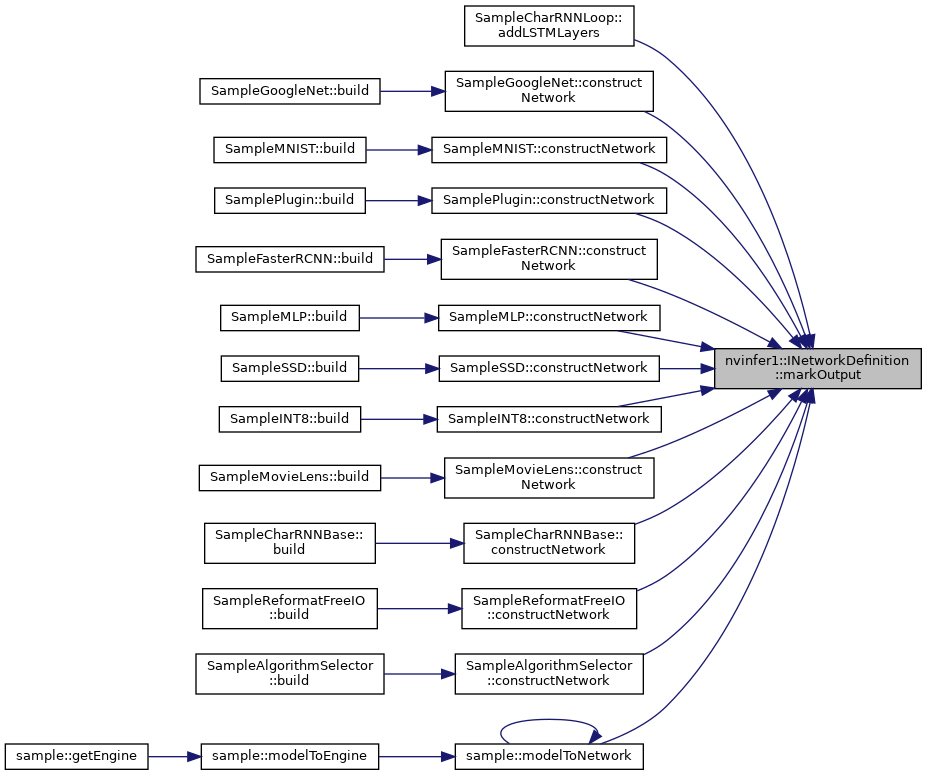

| virtual void | markOutput (ITensor &tensor)=0 |

| Mark a tensor as a network output. More... | |

| __attribute__ ((deprecated)) virtual IConvolutionLayer *addConvolution(ITensor &input | |

| Add a convolution layer to the network. More... | |

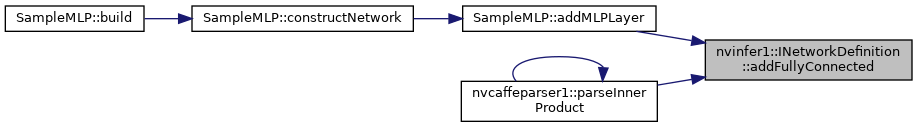

| virtual IFullyConnectedLayer * | addFullyConnected (ITensor &input, int32_t nbOutputs, Weights kernelWeights, Weights biasWeights)=0 |

| Add a fully connected layer to the network. More... | |

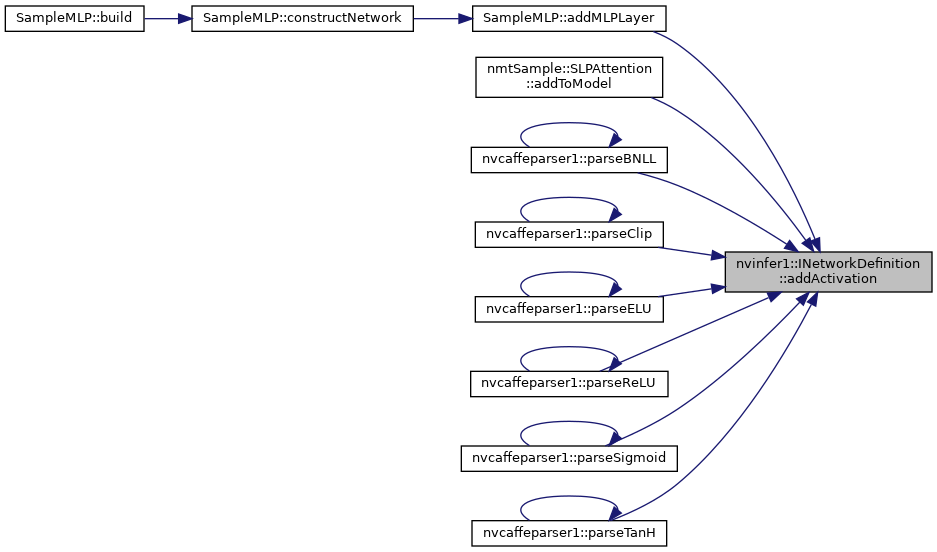

| virtual IActivationLayer * | addActivation (ITensor &input, ActivationType type)=0 |

| Add an activation layer to the network. More... | |

| __attribute__ ((deprecated)) virtual IPoolingLayer *addPooling(ITensor &input | |

| Add a pooling layer to the network. More... | |

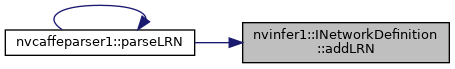

| virtual ILRNLayer * | addLRN (ITensor &input, int32_t window, float alpha, float beta, float k)=0 |

| Add a LRN layer to the network. More... | |

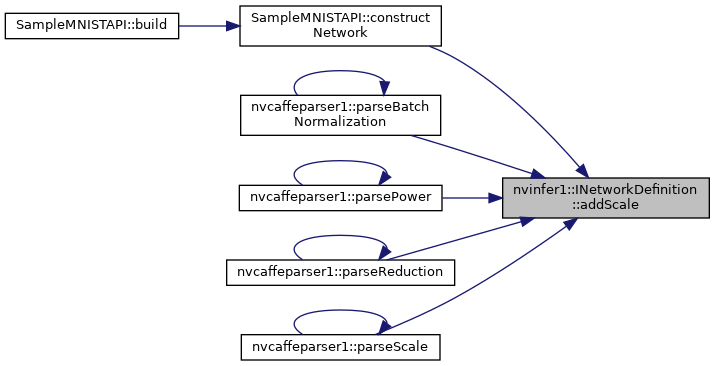

| virtual IScaleLayer * | addScale (ITensor &input, ScaleMode mode, Weights shift, Weights scale, Weights power)=0 |

| Add a Scale layer to the network. More... | |

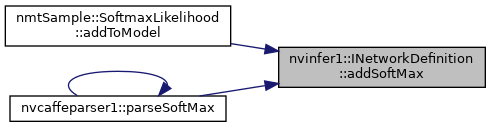

| virtual ISoftMaxLayer * | addSoftMax (ITensor &input)=0 |

| Add a SoftMax layer to the network. More... | |

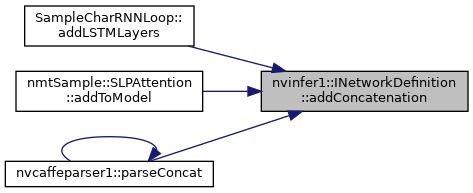

| virtual IConcatenationLayer * | addConcatenation (ITensor *const *inputs, int32_t nbInputs)=0 |

| Add a concatenation layer to the network. More... | |

| __attribute__ ((deprecated)) virtual IDeconvolutionLayer *addDeconvolution(ITensor &input | |

| Add a deconvolution layer to the network. More... | |

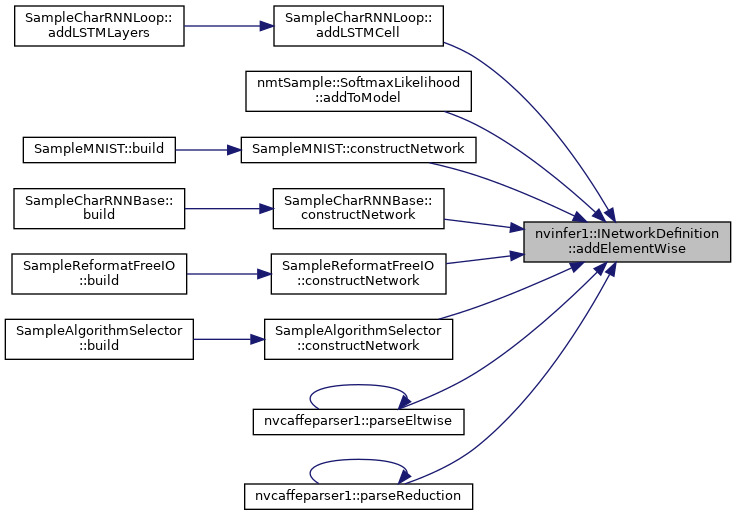

| virtual IElementWiseLayer * | addElementWise (ITensor &input1, ITensor &input2, ElementWiseOperation op)=0 |

| Add an elementwise layer to the network. More... | |

| __attribute__ ((deprecated)) virtual IRNNLayer *addRNN(ITensor &inputs | |

Add an layerCount deep RNN layer to the network with a sequence length of maxSeqLen and hiddenSize internal state per layer. More... | |

| __attribute__ ((deprecated)) virtual IPluginLayer *addPlugin(ITensor *const *inputs | |

| Add a plugin layer to the network. More... | |

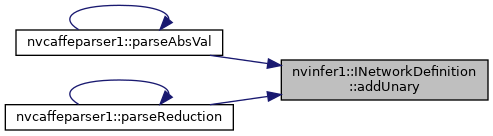

| virtual IUnaryLayer * | addUnary (ITensor &input, UnaryOperation operation)=0 |

| Add a unary layer to the network. More... | |

| __attribute__ ((deprecated)) virtual IPaddingLayer *addPadding(ITensor &input | |

| Add a padding layer to the network. More... | |

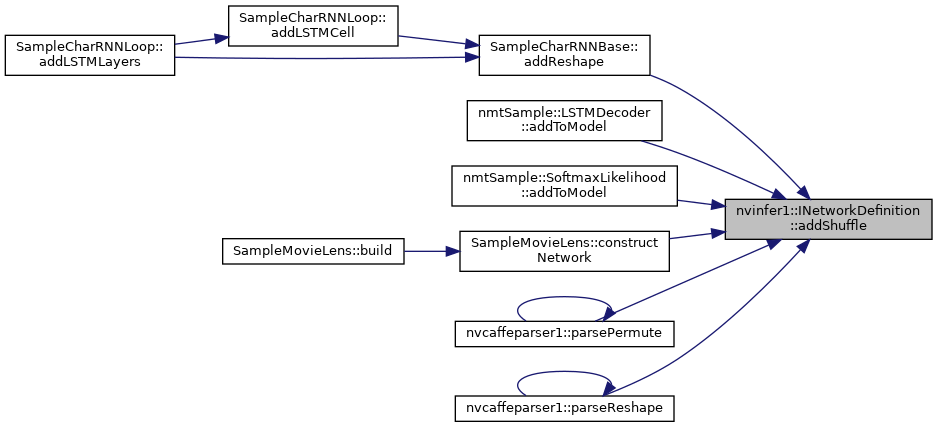

| virtual IShuffleLayer * | addShuffle (ITensor &input)=0 |

| Add a shuffle layer to the network. More... | |

| __attribute__ ((deprecated)) virtual void setPoolingOutputDimensionsFormula(IOutputDimensionsFormula *formula)=0 | |

| Set the pooling output dimensions formula. More... | |

| __attribute__ ((deprecated)) virtual IOutputDimensionsFormula &getPoolingOutputDimensionsFormula() const =0 | |

| Get the pooling output dimensions formula. More... | |

| __attribute__ ((deprecated)) virtual void setConvolutionOutputDimensionsFormula(IOutputDimensionsFormula *formula)=0 | |

| Set the convolution output dimensions formula. More... | |

| __attribute__ ((deprecated)) virtual IOutputDimensionsFormula &getConvolutionOutputDimensionsFormula() const =0 | |

| Get the convolution output dimensions formula. More... | |

| __attribute__ ((deprecated)) virtual void setDeconvolutionOutputDimensionsFormula(IOutputDimensionsFormula *formula)=0 | |

| Set the deconvolution output dimensions formula. More... | |

| __attribute__ ((deprecated)) virtual IOutputDimensionsFormula &getDeconvolutionOutputDimensionsFormula() const =0 | |

| Get the deconvolution output dimensions formula. More... | |

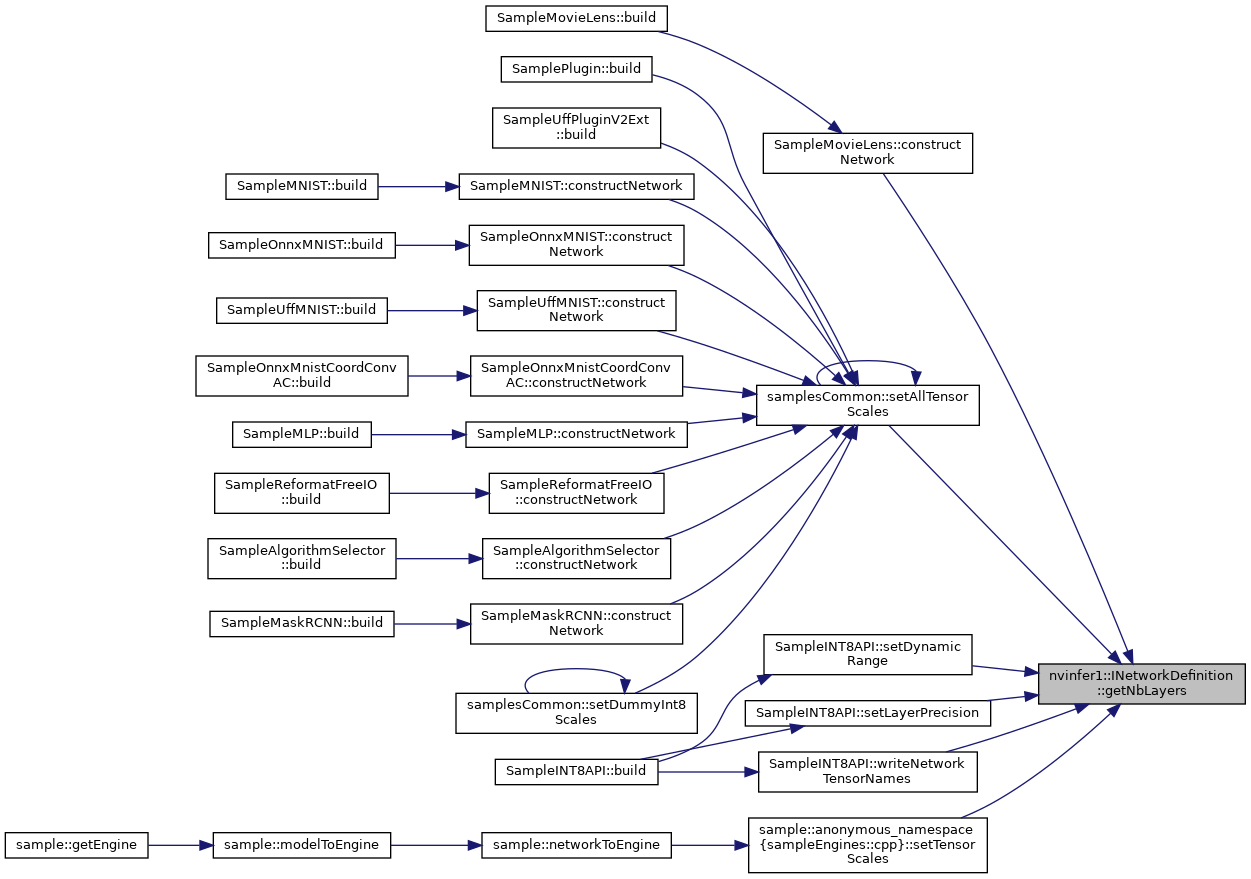

| virtual int32_t | getNbLayers () const =0 |

| Get the number of layers in the network. More... | |

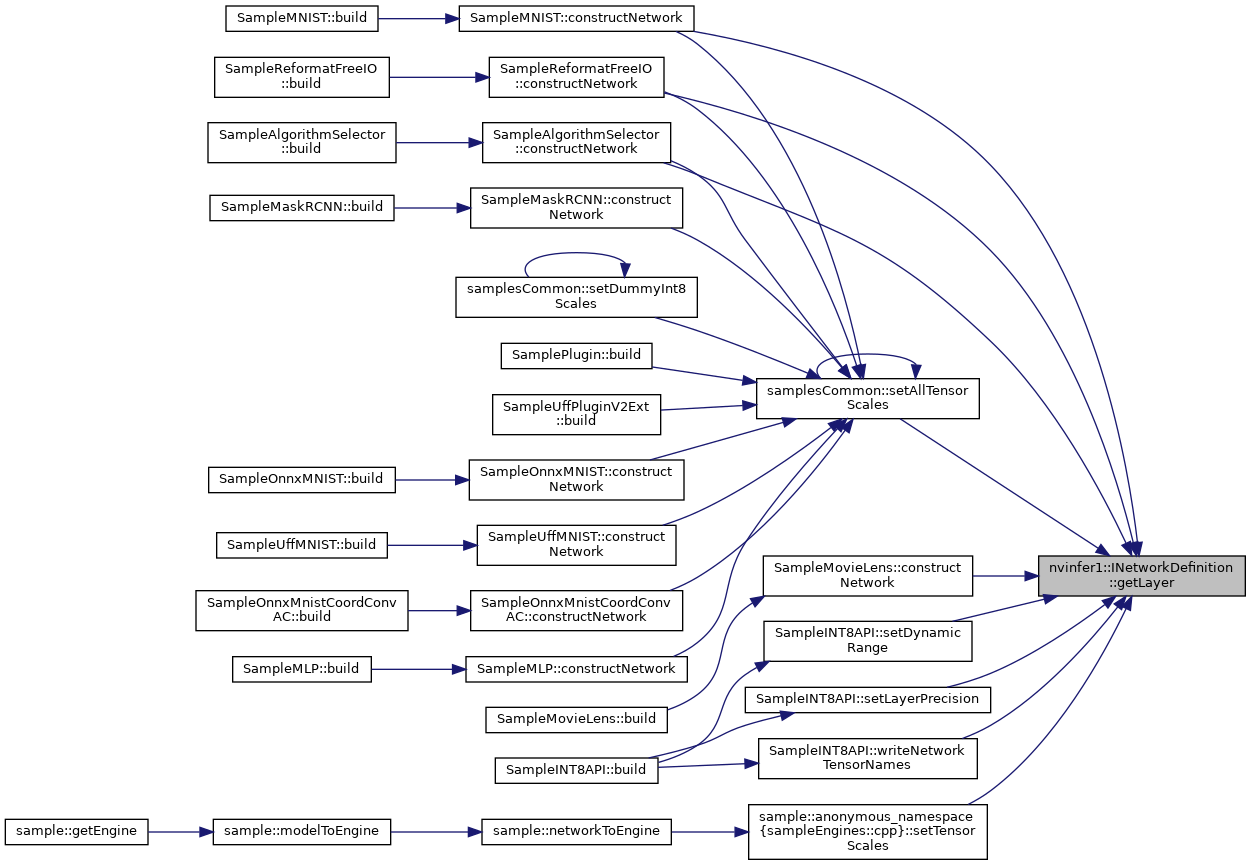

| virtual ILayer * | getLayer (int32_t index) const =0 |

| Get the layer specified by the given index. More... | |

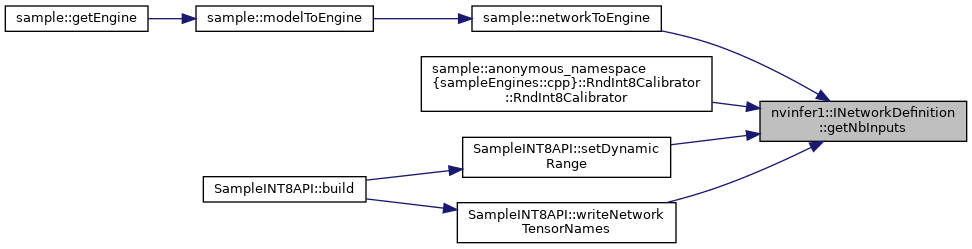

| virtual int32_t | getNbInputs () const =0 |

| Get the number of inputs in the network. More... | |

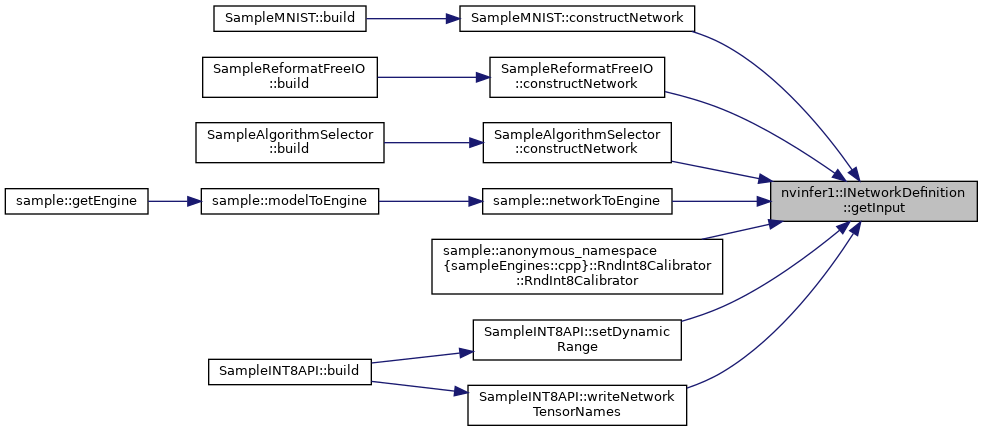

| virtual ITensor * | getInput (int32_t index) const =0 |

| Get the input tensor specified by the given index. More... | |

| virtual int32_t | getNbOutputs () const =0 |

| Get the number of outputs in the network. More... | |

| virtual ITensor * | getOutput (int32_t index) const =0 |

| Get the output tensor specified by the given index. More... | |

| virtual void | destroy ()=0 |

| Destroy this INetworkDefinition object. More... | |

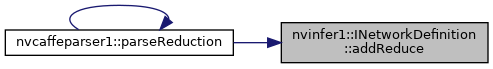

| virtual IReduceLayer * | addReduce (ITensor &input, ReduceOperation operation, uint32_t reduceAxes, bool keepDimensions)=0 |

| Add a reduce layer to the network. More... | |

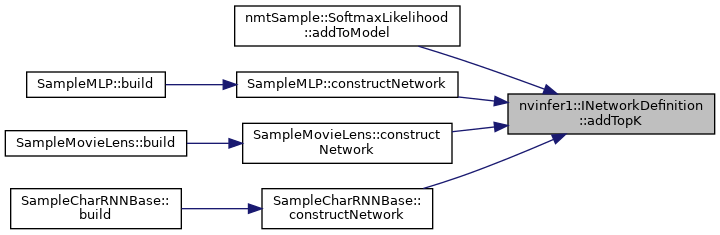

| virtual ITopKLayer * | addTopK (ITensor &input, TopKOperation op, int32_t k, uint32_t reduceAxes)=0 |

| Add a TopK layer to the network. More... | |

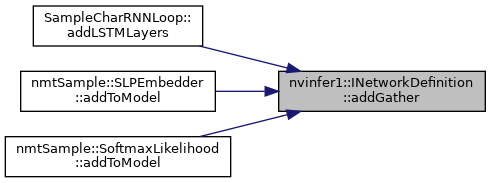

| virtual IGatherLayer * | addGather (ITensor &data, ITensor &indices, int32_t axis)=0 |

| Add a gather layer to the network. More... | |

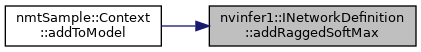

| virtual IRaggedSoftMaxLayer * | addRaggedSoftMax (ITensor &input, ITensor &bounds)=0 |

| Add a RaggedSoftMax layer to the network. More... | |

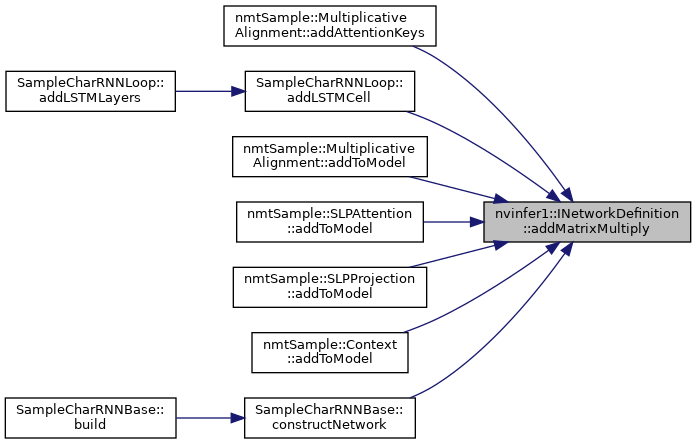

| virtual IMatrixMultiplyLayer * | addMatrixMultiply (ITensor &input0, MatrixOperation op0, ITensor &input1, MatrixOperation op1)=0 |

| Add a MatrixMultiply layer to the network. More... | |

| __attribute__ ((deprecated)) virtual IMatrixMultiplyLayer *addMatrixMultiply(ITensor &input0 | |

| Add a MatrixMultiply layer to the network. More... | |

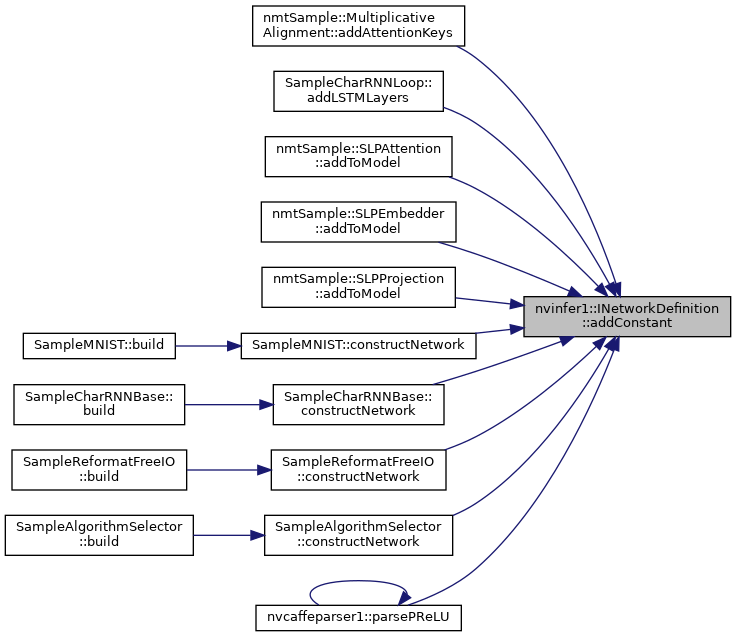

| virtual IConstantLayer * | addConstant (Dims dimensions, Weights weights)=0 |

| Add a constant layer to the network. More... | |

| __attribute__ ((deprecated)) virtual IRNNv2Layer *addRNNv2(ITensor &input | |

Add an layerCount deep RNN layer to the network with hiddenSize internal states that can take a batch with fixed or variable sequence lengths. More... | |

| __attribute__ ((deprecated)) virtual IPluginLayer *addPluginExt(ITensor *const *inputs | |

| Add a plugin layer to the network using an IPluginExt interface. More... | |

| virtual IIdentityLayer * | addIdentity (ITensor &input)=0 |

| Add an identity layer. More... | |

| virtual void | removeTensor (ITensor &tensor)=0 |

| remove a tensor from the network definition. More... | |

| virtual void | unmarkOutput (ITensor &tensor)=0 |

| unmark a tensor as a network output. More... | |

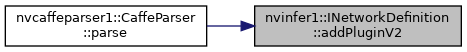

| virtual IPluginV2Layer * | addPluginV2 (ITensor *const *inputs, int32_t nbInputs, IPluginV2 &plugin)=0 |

| Add a plugin layer to the network using the IPluginV2 interface. More... | |

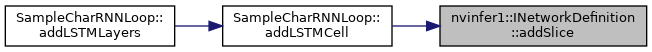

| virtual ISliceLayer * | addSlice (ITensor &input, Dims start, Dims size, Dims stride)=0 |

| Add a slice layer to the network. More... | |

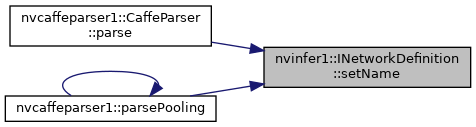

| virtual void | setName (const char *name)=0 |

| Sets the name of the network. More... | |

| virtual const char * | getName () const =0 |

| Returns the name associated with the network. More... | |

| virtual IShapeLayer * | addShape (ITensor &input)=0 |

| Add a shape layer to the network. More... | |

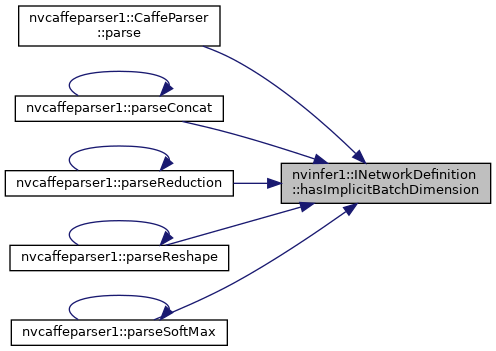

| virtual bool | hasImplicitBatchDimension () const =0 |

| Query whether the network was created with an implicit batch dimension. More... | |

| virtual bool | markOutputForShapes (ITensor &tensor)=0 |

| Enable tensor's value to be computed by IExecutionContext::getShapeBinding. More... | |

| virtual bool | unmarkOutputForShapes (ITensor &tensor)=0 |

| Undo markOutputForShapes. More... | |

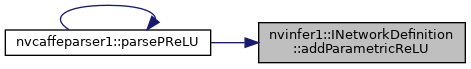

| virtual IParametricReLULayer * | addParametricReLU (ITensor &input, ITensor &slope) noexcept=0 |

| Add a parametric ReLU layer to the network. More... | |

| virtual IConvolutionLayer * | addConvolutionNd (ITensor &input, int32_t nbOutputMaps, Dims kernelSize, Weights kernelWeights, Weights biasWeights)=0 |

| Add a multi-dimension convolution layer to the network. More... | |

| virtual IPoolingLayer * | addPoolingNd (ITensor &input, PoolingType type, Dims windowSize)=0 |

| Add a multi-dimension pooling layer to the network. More... | |

| virtual IDeconvolutionLayer * | addDeconvolutionNd (ITensor &input, int32_t nbOutputMaps, Dims kernelSize, Weights kernelWeights, Weights biasWeights)=0 |

| Add a multi-dimension deconvolution layer to the network. More... | |

| virtual IScaleLayer * | addScaleNd (ITensor &input, ScaleMode mode, Weights shift, Weights scale, Weights power, int32_t channelAxis)=0 |

| Add a multi-dimension scale layer to the network. More... | |

| virtual IResizeLayer * | addResize (ITensor &input)=0 |

| Add a resize layer to the network. More... | |

| virtual bool | hasExplicitPrecision () const =0 |

| True if network is an explicit precision network. More... | |

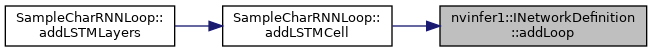

| virtual ILoop * | addLoop () noexcept=0 |

| Add a loop to the network. More... | |

| virtual ISelectLayer * | addSelect (ITensor &condition, ITensor &thenInput, ITensor &elseInput)=0 |

| Add a select layer to the network. More... | |

| virtual IFillLayer * | addFill (Dims dimensions, FillOperation op) noexcept=0 |

| Add a fill layer to the network. More... | |

| virtual IPaddingLayer * | addPaddingNd (ITensor &input, Dims prePadding, Dims postPadding)=0 |

| Add a padding layer to the network. More... | |

Public Attributes | |

| int32_t | nbOutputMaps |

| int32_t DimsHW | kernelSize |

| int32_t DimsHW Weights | kernelWeights |

| int32_t DimsHW Weights Weights | biasWeights = 0 |

| PoolingType | type |

| PoolingType DimsHW | windowSize = 0 |

| int32_t | layerCount |

| int32_t std::size_t | hiddenSize |

| int32_t std::size_t int32_t | maxSeqLen |

| int32_t std::size_t int32_t RNNOperation | op |

| int32_t std::size_t int32_t RNNOperation RNNInputMode | mode |

| int32_t std::size_t int32_t RNNOperation RNNInputMode RNNDirection | dir |

| int32_t std::size_t int32_t RNNOperation RNNInputMode RNNDirection Weights | weights |

| int32_t std::size_t int32_t RNNOperation RNNInputMode RNNDirection Weights Weights | bias = 0 |

| int32_t | nbInputs |

| int32_t IPlugin & | plugin = 0 |

| DimsHW | prePadding |

| DimsHW DimsHW | postPadding = 0 |

| bool | transpose0 |

| bool ITensor & | input1 |

| bool ITensor bool | transpose1 = 0 |

| int32_t int32_t | hiddenSize |

| int32_t int32_t int32_t | maxSeqLen |

| int32_t int32_t int32_t RNNOperation | op = 0 |

| int32_t IPluginExt & | plugin = 0 |

Protected Member Functions | |

| virtual | ~INetworkDefinition () |

A network definition for input to the builder.

A network definition defines the structure of the network and, combined with an IBuilderConfig, is built into an engine using an IBuilder. An INetworkDefinition can either have an implicit batch dimension, specified at runtime, or have all dimensions explicit (full dims mode) in the network definition. When a network has been created using createNetwork(), only implicit batch size mode is supported. The function hasImplicitBatchDimension() is used to query the mode of the network.

A network with an implicit batch dimension returns the dimensions of a layer without the implicit dimension; instead, the batch is specified at execute/enqueue time. If the network has all dimensions specified, then the first dimension follows elementwise broadcast rules: if it is 1 for some inputs and is some value N for all other inputs, then the first dimension of each output is N, and the inputs with 1 for the first dimension are broadcast. Having divergent batch sizes across inputs to a layer is not supported.

| virtual nvinfer1::INetworkDefinition::~INetworkDefinition () | inline, protected, virtual |

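| virtual ITensor * nvinfer1::INetworkDefinition::addInput (const char *name, DataType type, Dims dimensions) | pure virtual |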

Add an input tensor to the network.

The name of the input tensor is used to find the index into the buffer array for an engine built from the network. The volume of the dimensions must be less than 2^30 elements. For networks with an implicit batch dimension, this volume includes the batch dimension with its length set to the maximum batch size. For networks with all explicit dimensions and with wildcard dimensions, the volume is based on the maxima specified by an IOptimizationProfile.

Dimensions are normally non-negative integers. The exception is that in networks with all explicit dimensions, -1 can be used as a wildcard for a dimension to be specified at runtime. Input tensors with such a wildcard must have a corresponding entry in the IOptimizationProfiles indicating the permitted extrema, and the input dimensions must be set by IExecutionContext::setBindingDimensions. Different IExecutionContext instances can have different dimensions. Wildcard dimensions are only supported for EngineCapability::kDEFAULT; they are not supported in safety contexts. DLA does not support wildcard dimensions.

Tensor dimensions are specified independent of format. For example, if a tensor is formatted in "NHWC" or a vectorized format, the dimensions are still specified in the order {N, C, H, W}. For 2D images with a channel dimension, the last three dimensions are always {C, H, W}. For 3D images with a channel dimension, the last four dimensions are always {C, D, H, W}.

| name | The name of the tensor. |

| type | The type of the data held in the tensor. |

| dimensions | The dimensions of the tensor. |
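The volume rule above can be checked before calling addInput(). The sketch below is a hypothetical helper, not part of the TensorRT API: it resolves each -1 wildcard to a per-dimension maximum (assumed here to be read from whatever optimization profile you intend to use) and reports whether the resulting volume stays below 2^30 elements.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper, not part of the TensorRT API: checks the documented
// rule that an input tensor's volume must be less than 2^30 elements.
//   dims    - dimensions as passed to addInput(); -1 marks a wildcard
//             (legal only when all dimensions are explicit).
//   maxDims - per-dimension maxima, assumed to come from an optimization
//             profile; only consulted where dims[i] == -1.
// For an implicit-batch network, prepend the maximum batch size to dims.
bool inputVolumeWithinLimit(const std::vector<int32_t>& dims,
                            const std::vector<int32_t>& maxDims)
{
    if (dims.size() != maxDims.size())
    {
        throw std::invalid_argument("dims and maxDims differ in rank");
    }
    // Resolve wildcards to their maxima and reject any other negative value.
    std::vector<int64_t> resolved;
    bool hasZero = false;
    for (std::size_t i = 0; i < dims.size(); ++i)
    {
        const int64_t d = dims[i] == -1 ? maxDims[i] : dims[i];
        if (d < 0)
        {
            throw std::invalid_argument("negative dimension");
        }
        hasZero = hasZero || d == 0;
        resolved.push_back(d);
    }
    if (hasZero)
    {
        return true; // a zero-volume tensor is trivially within the limit
    }
    const int64_t kLimit = int64_t{1} << 30;
    int64_t volume = 1;
    for (int64_t d : resolved)
    {
        volume *= d; // no overflow: volume < 2^30 and d < 2^31 at this point
        if (volume >= kLimit)
        {
            return false;
        }
    }
    return true;
}
```

For example, {-1, 3, 1024, 1024} with a profile maximum of 64 for the wildcard stays under the limit, while a maximum of 1024 gives 3 * 2^30 elements and fails.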

| virtual void nvinfer1::INetworkDefinition::markOutput (ITensor &tensor) | pure virtual |

Mark a tensor as a network output.

| tensor | The tensor to mark as an output tensor. |

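| virtual IConvolutionLayer * nvinfer1::INetworkDefinition::addConvolution (ITensor &input, int32_t nbOutputMaps, DimsHW kernelSize, Weights kernelWeights, Weights biasWeights) | deprecated, pure virtual |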

Add a convolution layer to the network.

| input | The input tensor to the convolution. |

| nbOutputMaps | The number of output feature maps for the convolution. |

| kernelSize | The HW-dimensions of the convolution kernel. |

| kernelWeights | The kernel weights for the convolution. |

| biasWeights | The optional bias weights for the convolution. |

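| virtual IFullyConnectedLayer * nvinfer1::INetworkDefinition::addFullyConnected (ITensor &input, int32_t nbOutputs, Weights kernelWeights, Weights biasWeights) | pure virtual |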

Add a fully connected layer to the network.

| input | The input tensor to the layer. |

| nbOutputs | The number of outputs of the layer. |

| kernelWeights | The kernel weights for the fully connected layer. |

| biasWeights | The optional bias weights for the fully connected layer. |

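| virtual IActivationLayer * nvinfer1::INetworkDefinition::addActivation (ITensor &input, ActivationType type) | pure virtual |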

Add an activation layer to the network.

| input | The input tensor to the layer. |

| type | The type of activation function to apply. |

Note that the setAlpha() and setBeta() methods must be called on the returned IActivationLayer for activations that require these parameters.

| virtual IPoolingLayer * nvinfer1::INetworkDefinition::addPooling (ITensor &input, PoolingType type, DimsHW windowSize) | deprecated, pure virtual |

Add a pooling layer to the network.

| input | The input tensor to the layer. |

| type | The type of pooling to apply. |

| windowSize | The size of the pooling window. |

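| virtual ILRNLayer * nvinfer1::INetworkDefinition::addLRN (ITensor &input, int32_t window, float alpha, float beta, float k) | pure virtual |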

Add a LRN layer to the network.

| input | The input tensor to the layer. |

| window | The size of the window. |

| alpha | The alpha value for the LRN computation. |

| beta | The beta value for the LRN computation. |

| k | The k value for the LRN computation. |

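| virtual IScaleLayer * nvinfer1::INetworkDefinition::addScale (ITensor &input, ScaleMode mode, Weights shift, Weights scale, Weights power) | pure virtual |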

Add a Scale layer to the network.

| input | The input tensor to the layer. This tensor is required to have a minimum of 3 dimensions. |

| mode | The scaling mode. |

| shift | The shift value. |

| scale | The scale value. |

| power | The power value. |

If the weights are available, then the number of weights depends on the ScaleMode. For ::kUNIFORM, the number of weights equals 1. For ::kCHANNEL, the number of weights equals the channel dimension. For ::kELEMENTWISE, the number of weights equals the product of the last three dimensions of the input.

| virtual ISoftMaxLayer * nvinfer1::INetworkDefinition::addSoftMax (ITensor &input) | pure virtual |

Add a SoftMax layer to the network.

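| virtual IConcatenationLayer * nvinfer1::INetworkDefinition::addConcatenation (ITensor *const *inputs, int32_t nbInputs) | pure virtual |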

Add a concatenation layer to the network.

| inputs | The input tensors to the layer. |

| nbInputs | The number of input tensors. |

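| virtual IDeconvolutionLayer * nvinfer1::INetworkDefinition::addDeconvolution (ITensor &input, int32_t nbOutputMaps, DimsHW kernelSize, Weights kernelWeights, Weights biasWeights) | deprecated, pure virtual |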

Add a deconvolution layer to the network.

| input | The input tensor to the layer. |

| nbOutputMaps | The number of output feature maps. |

| kernelSize | The HW-dimensions of the deconvolution kernel. |

| kernelWeights | The kernel weights for the deconvolution. |

| biasWeights | The optional bias weights for the deconvolution. |

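| virtual IElementWiseLayer * nvinfer1::INetworkDefinition::addElementWise (ITensor &input1, ITensor &input2, ElementWiseOperation op) | pure virtual |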

Add an elementwise layer to the network.

| input1 | The first input tensor to the layer. |

| input2 | The second input tensor to the layer. |

| op | The binary operation that the layer applies. |

The input tensors must have the same number of dimensions. For each dimension, their lengths must match, or one of them must be one. In the latter case, the tensor is broadcast along that axis.

The output tensor has the same number of dimensions as the inputs. For each dimension, its length is the maximum of the lengths of the corresponding input dimension.
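The broadcast rule for addElementWise() can be expressed as a small shape function. This is a hypothetical helper for illustration only, not a TensorRT API; it applies the three documented conditions (equal rank, equal-or-one per dimension, output takes the maximum) to plain integer vectors.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper, not part of the TensorRT API, applying the
// addElementWise() broadcast rule described above:
//  - both inputs must have the same number of dimensions;
//  - per dimension, the lengths must match or one of them must be 1;
//  - the output length is the maximum of the two input lengths.
std::vector<int32_t> elementWiseOutputDims(const std::vector<int32_t>& a,
                                           const std::vector<int32_t>& b)
{
    if (a.size() != b.size())
    {
        throw std::invalid_argument("inputs must have the same number of dimensions");
    }
    std::vector<int32_t> out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i)
    {
        if (a[i] != b[i] && a[i] != 1 && b[i] != 1)
        {
            throw std::invalid_argument("lengths differ and neither is 1");
        }
        out[i] = std::max(a[i], b[i]); // the length-1 side is broadcast
    }
    return out;
}
```

For example, inputs of shape {2, 1, 4} and {2, 3, 1} produce an output of shape {2, 3, 4}, while {2, 3} and {2, 4} are rejected.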

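| virtual IRNNLayer * nvinfer1::INetworkDefinition::addRNN (ITensor &inputs, int32_t layerCount, std::size_t hiddenSize, int32_t maxSeqLen, RNNOperation op, RNNInputMode mode, RNNDirection dir, Weights weights, Weights bias) | deprecated, pure virtual |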

Add a layerCount-deep RNN layer to the network with a sequence length of maxSeqLen and hiddenSize internal state per layer.

| inputs | The input tensor to the layer. |

| layerCount | The number of layers in the RNN. |

| hiddenSize | The size of the internal hidden state for each layer. |

| maxSeqLen | The maximum length of the time sequence. |

| op | The type of RNN to execute. |

| mode | The input mode for the RNN. |

| dir | The direction to run the RNN. |

| weights | The weights for the weight matrix parameters of the RNN. |

| bias | The weights for the bias vectors parameters of the RNN. |

The inputs tensor must be of the type DataType::kFLOAT or DataType::kHALF, and have non-zero volume.

See IRNNLayer::setWeights() and IRNNLayer::setBias() for details on the required input format for weights and bias.

The layout for the input tensor should be {1, S_max, N, E}, where:

- S_max is the maximum allowed sequence length (number of RNN iterations).
- N is the batch size.
- E specifies the embedding length (unless ::kSKIP is set, in which case it should match getHiddenSize()).

The first output tensor is the output of the final RNN layer across all timesteps, with dimensions {S_max, N, H}:

- S_max is the maximum allowed sequence length (number of RNN iterations).
- N is the batch size.
- H is an output hidden state (equal to getHiddenSize() or 2x getHiddenSize()).

The second tensor is the final hidden state of the RNN across all layers, and if the RNN is an LSTM (i.e. getOperation() is ::kLSTM), then the third tensor is the final cell state of the RNN across all layers. Both the second and third output tensors have dimensions {L, N, H}:

- L is equal to getLayerCount() if getDirection() is ::kUNIDIRECTION, and 2*getLayerCount() if getDirection() is ::kBIDIRECTION. In the bidirectional case, layer l's final forward hidden state is stored in L = 2*l, and its final backward hidden state is stored in L = 2*l + 1.
- N is the batch size.
- H is getHiddenSize().

Note that in bidirectional RNNs, the full "hidden state" for a layer l is the concatenation of its forward hidden state and its backward hidden state, and its size is 2*H.
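The shape bookkeeping for the deprecated addRNN() outputs can be sketched as plain functions. These helpers are hypothetical and not part of the TensorRT API; they assume the "2x getHiddenSize()" case for the first output corresponds to a bidirectional RNN, which follows from the note that a bidirectional layer's full hidden state has size 2*H.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helpers, not part of the TensorRT API, mirroring the output
// shape rules documented for the deprecated addRNN().
//   sMax = S_max, n = batch size N, hiddenSize = getHiddenSize(),
//   layerCount = getLayerCount(), bidirectional = direction is kBIDIRECTION.
struct RnnOutputShapes
{
    std::vector<int32_t> output;      // first output: {S_max, N, H}
    std::vector<int32_t> finalStates; // second (and third) output: {L, N, H}
};

RnnOutputShapes rnnOutputShapes(int32_t sMax, int32_t n, int32_t hiddenSize,
                                int32_t layerCount, bool bidirectional)
{
    // Assumption: the first output's H doubles when bidirectional, because
    // forward and backward states are concatenated.
    const int32_t outH = bidirectional ? 2 * hiddenSize : hiddenSize;
    // L has one entry per layer and direction.
    const int32_t l = bidirectional ? 2 * layerCount : layerCount;
    return {{sMax, n, outH}, {l, n, hiddenSize}};
}

// Index along L of a layer's final state. Bidirectional RNNs store the
// forward state at 2*layer and the backward state at 2*layer + 1;
// unidirectional RNNs (assumed) store layer `layer` at index `layer`.
int32_t finalStateIndex(int32_t layer, bool bidirectional, bool backward)
{
    if (!bidirectional)
    {
        assert(!backward);
        return layer;
    }
    return 2 * layer + (backward ? 1 : 0);
}
```

For a two-layer bidirectional LSTM with S_max = 10, N = 4 and hidden size 32, this predicts a first output of {10, 4, 64} and final-state outputs of {4, 4, 32}, with layer 1's backward state at index 3.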

| virtual IPluginLayer * nvinfer1::INetworkDefinition::addPlugin (ITensor *const *inputs, int32_t nbInputs, IPlugin &plugin) | deprecated, pure virtual |

Add a plugin layer to the network.

| inputs | The input tensors to the layer. |

| nbInputs | The number of input tensors. |

| plugin | The layer plugin. |

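| virtual IUnaryLayer * nvinfer1::INetworkDefinition::addUnary (ITensor &input, UnaryOperation operation) | pure virtual |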

Add a unary layer to the network.

| input | The input tensor to the layer. |

| operation | The operation to apply. |

| virtual IPaddingLayer * nvinfer1::INetworkDefinition::addPadding (ITensor &input, DimsHW prePadding, DimsHW postPadding) | deprecated, pure virtual |

Add a padding layer to the network.

| input | The input tensor to the layer. |

| prePadding | The padding to apply to the start of the tensor. |

| postPadding | The padding to apply to the end of the tensor. |

| virtual IShuffleLayer * nvinfer1::INetworkDefinition::addShuffle (ITensor &input) | pure virtual |

Add a shuffle layer to the network.

| input | The input tensor to the layer. |

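| virtual void nvinfer1::INetworkDefinition::setPoolingOutputDimensionsFormula (IOutputDimensionsFormula *formula) | deprecated, pure virtual |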

Set the pooling output dimensions formula.

| formula | The formula for computing the pooling output dimensions. If null is passed, the default formula is used. |

The default formula in each dimension is (inputDim + padding * 2 - kernelSize) / stride + 1.
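The default formula is easy to evaluate by hand or in code. The function below is a hypothetical helper, not a TensorRT API; it computes one spatial dimension with the formula above, relying on C++ integer division, which truncates and therefore floors when the numerator is non-negative. The same formula is documented below as the default for convolution.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper, not part of the TensorRT API: evaluates the default
// pooling (and convolution) output-dimension formula for one dimension:
//   (inputDim + padding * 2 - kernelSize) / stride + 1
// Integer division truncates; it floors here because the asserted
// precondition keeps the numerator non-negative.
int32_t defaultPoolOrConvOutputDim(int32_t inputDim, int32_t kernelSize,
                                   int32_t stride, int32_t padding)
{
    assert(stride > 0);
    assert(inputDim + 2 * padding >= kernelSize);
    return (inputDim + padding * 2 - kernelSize) / stride + 1;
}
```

For example, a 224-wide input with kernel 3, stride 2 and padding 1 gives (224 + 2 - 3) / 2 + 1 = 112.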

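| virtual IOutputDimensionsFormula & nvinfer1::INetworkDefinition::getPoolingOutputDimensionsFormula () const | deprecated, pure virtual |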

Get the pooling output dimensions formula.

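| virtual void nvinfer1::INetworkDefinition::setConvolutionOutputDimensionsFormula (IOutputDimensionsFormula *formula) | deprecated, pure virtual |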

Set the convolution output dimensions formula.

| formula | The formula for computing the convolution output dimensions. If null is passed, the default formula is used. |

The default formula in each dimension is (inputDim + padding * 2 - kernelSize) / stride + 1.

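| virtual IOutputDimensionsFormula & nvinfer1::INetworkDefinition::getConvolutionOutputDimensionsFormula () const | deprecated, pure virtual |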

Get the convolution output dimensions formula.

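| virtual void nvinfer1::INetworkDefinition::setDeconvolutionOutputDimensionsFormula (IOutputDimensionsFormula *formula) | deprecated, pure virtual |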

Set the deconvolution output dimensions formula.

| formula | The formula for computing the deconvolution output dimensions. If null is passed, the default formula is used. |

The default formula in each dimension is (inputDim - 1) * stride + kernelSize - 2 * padding.
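The default deconvolution formula can be checked the same way. This is a hypothetical helper, not a TensorRT API, that evaluates the formula above for one spatial dimension.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper, not part of the TensorRT API: evaluates the default
// deconvolution output-dimension formula for one dimension:
//   (inputDim - 1) * stride + kernelSize - 2 * padding
int32_t defaultDeconvOutputDim(int32_t inputDim, int32_t kernelSize,
                               int32_t stride, int32_t padding)
{
    assert(inputDim >= 1 && stride > 0);
    return (inputDim - 1) * stride + kernelSize - 2 * padding;
}
```

With kernel 4, stride 2 and padding 1, a 112-wide input becomes (112 - 1) * 2 + 4 - 2 = 224; the default convolution formula maps 224 back to 112 with the same settings.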

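| virtual IOutputDimensionsFormula & nvinfer1::INetworkDefinition::getDeconvolutionOutputDimensionsFormula () const | deprecated, pure virtual |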

Get the deconvolution output dimensions formula.

| virtual int32_t nvinfer1::INetworkDefinition::getNbLayers () const | pure virtual |

Get the number of layers in the network.

| virtual ILayer * nvinfer1::INetworkDefinition::getLayer (int32_t index) const | pure virtual |

Get the layer specified by the given index.

| index | The index of the layer. |

| virtual int32_t nvinfer1::INetworkDefinition::getNbInputs () const | pure virtual |

Get the number of inputs in the network.

| virtual ITensor * nvinfer1::INetworkDefinition::getInput (int32_t index) const | pure virtual |

Get the input tensor specified by the given index.

| index | The index of the input tensor. |

| virtual int32_t nvinfer1::INetworkDefinition::getNbOutputs () const | pure virtual |

Get the number of outputs in the network.

The outputs include those marked by markOutput or markOutputForShapes.

| virtual ITensor * nvinfer1::INetworkDefinition::getOutput (int32_t index) const | pure virtual |

Get the output tensor specified by the given index.

| index | The index of the output tensor. |

| virtual void nvinfer1::INetworkDefinition::destroy () | pure virtual |

Destroy this INetworkDefinition object.

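| virtual IReduceLayer * nvinfer1::INetworkDefinition::addReduce (ITensor &input, ReduceOperation operation, uint32_t reduceAxes, bool keepDimensions) | pure virtual |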

Add a reduce layer to the network.

| input | The input tensor to the layer. |

| operation | The reduction operation to perform. |

| reduceAxes | The reduction dimensions. The bit in position i of bitmask reduceAxes corresponds to explicit dimension i of the result. E.g., the least significant bit corresponds to the first explicit dimension and the next-to-least significant bit corresponds to the second explicit dimension. |

| keepDimensions | The boolean that specifies whether or not to keep the reduced dimensions in the output of the layer. |

The reduce layer works by performing an operation specified by operation to reduce the tensor input across the axes specified by reduceAxes.
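Building the reduceAxes bitmask and predicting the output shape can be sketched as follows. Both functions are hypothetical helpers, not TensorRT APIs. The output-shape helper assumes the common convention that dimensions kept via keepDimensions have length 1 and that, without it, reduced dimensions are dropped.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <initializer_list>
#include <vector>

// Hypothetical helper, not part of the TensorRT API: builds a reduceAxes
// bitmask in which bit i selects explicit dimension i (bit 0 = first).
uint32_t makeReduceAxes(std::initializer_list<int32_t> axes)
{
    uint32_t mask = 0;
    for (int32_t axis : axes)
    {
        assert(axis >= 0 && axis < 32);
        mask |= 1u << axis;
    }
    return mask;
}

// Hypothetical helper: predicted output dimensions of a reduction.
// Assumption: with keepDimensions, each reduced axis remains with length 1;
// without it, reduced axes are removed from the output.
std::vector<int32_t> reduceOutputDims(const std::vector<int32_t>& dims,
                                      uint32_t reduceAxes, bool keepDimensions)
{
    assert(dims.size() <= 32);
    std::vector<int32_t> out;
    for (std::size_t i = 0; i < dims.size(); ++i)
    {
        const bool reduced = ((reduceAxes >> i) & 1u) != 0;
        if (!reduced)
        {
            out.push_back(dims[i]);
        }
        else if (keepDimensions)
        {
            out.push_back(1);
        }
    }
    return out;
}
```

Reducing a {8, 3, 4, 5} tensor over its last two dimensions uses the mask 0b1100 (12) and, under the stated assumption, yields {8, 3, 1, 1} with keepDimensions and {8, 3} without.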

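| virtual ITopKLayer * nvinfer1::INetworkDefinition::addTopK (ITensor &input, TopKOperation op, int32_t k, uint32_t reduceAxes) | pure virtual |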

Add a TopK layer to the network.

The TopK layer has two outputs of the same dimensions. The first contains data values, the second contains index positions for the values. Output values are sorted, largest first for operation kMAX and smallest first for operation kMIN.

Currently only values of k up to 1024 are supported.

| input | The input tensor to the layer. |

| op | Operation to perform. |

| k | Number of elements to keep. |

| reduceAxes | The reduction dimensions. The bit in position i of bitmask reduceAxes corresponds to explicit dimension i of the result. E.g., the least significant bit corresponds to the first explicit dimension and the next to least significant bit corresponds to the second explicit dimension. |

Currently reduceAxes must specify exactly one dimension, and it must be one of the last four dimensions.
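The addTopK() constraints above can be validated up front. The function below is a hypothetical validator, not a TensorRT API. It checks that reduceAxes has exactly one bit set, that the selected axis is one of the last four of an nbDims-dimensional input, and that k is at most 1024; requiring k to be at least 1 is an added assumption.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical validator, not part of the TensorRT API, for the documented
// addTopK() constraints. Requiring k >= 1 is an added assumption.
bool topKArgsSupported(int32_t nbDims, int32_t k, uint32_t reduceAxes)
{
    // A power of two has exactly one bit set.
    const bool exactlyOneBit = reduceAxes != 0 && (reduceAxes & (reduceAxes - 1)) == 0;
    if (!exactlyOneBit || k < 1 || k > 1024)
    {
        return false;
    }
    // Find the index of the single set bit; the loop terminates because
    // exactly one bit is known to be set.
    int32_t axis = 0;
    while (((reduceAxes >> axis) & 1u) == 0)
    {
        ++axis;
    }
    // The axis must exist and be one of the last four dimensions.
    return axis < nbDims && axis >= nbDims - 4;
}
```

For a 6-dimensional input, axes 2 through 5 are accepted and axis 1 is rejected; a mask such as 0b11 is rejected because it names two dimensions.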

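| virtual IGatherLayer * nvinfer1::INetworkDefinition::addGather (ITensor &data, ITensor &indices, int32_t axis) | pure virtual |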

Add a gather layer to the network.

| data | The tensor to gather values from. |

| indices | The tensor to get indices from to populate the output tensor. |

| axis | The axis in the data tensor to gather on. |

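| virtual IRaggedSoftMaxLayer * nvinfer1::INetworkDefinition::addRaggedSoftMax (ITensor &input, ITensor &bounds) | pure virtual |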

Add a RaggedSoftMax layer to the network.

| input | The ZxS input tensor. |

| bounds | The Zx1 bounds tensor. |

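| virtual IMatrixMultiplyLayer * nvinfer1::INetworkDefinition::addMatrixMultiply (ITensor &input0, MatrixOperation op0, ITensor &input1, MatrixOperation op1) | pure virtual |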

Add a MatrixMultiply layer to the network.

| input0 | The first input tensor (commonly A). |

| op0 | The operation to apply to input0. |

| input1 | The second input tensor (commonly B). |

| op1 | The operation to apply to input1. |

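| virtual IMatrixMultiplyLayer * nvinfer1::INetworkDefinition::addMatrixMultiply (ITensor &input0, bool transpose0, ITensor &input1, bool transpose1) | deprecated, pure virtual |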

Add a MatrixMultiply layer to the network.

| input0 | The first input tensor (commonly A). |

| transpose0 | If true, op(input0)=transpose(input0), else op(input0)=input0. |

| input1 | The second input tensor (commonly B). |

| transpose1 | If true, op(input1)=transpose(input1), else op(input1)=input1. |
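The meaning of transpose0 and transpose1 can be illustrated with a CPU reference. This is plain C++, not the TensorRT API, restricted to 2-D row-major matrices; the Matrix type and matMul function are hypothetical names introduced for the sketch. It computes op(A) * op(B), where op(X) is X transposed when the corresponding flag is true.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Reference sketch in plain C++ (not the TensorRT API) of what the deprecated
// addMatrixMultiply(input0, transpose0, input1, transpose1) computes for 2-D
// row-major matrices: C = op(A) * op(B), with op(X) = X^T if the flag is set.
struct Matrix
{
    std::size_t rows;
    std::size_t cols;
    std::vector<float> data; // row-major, rows * cols elements

    // Element (r, c) of X, or of X^T when transposed (which is X(c, r)).
    float at(std::size_t r, std::size_t c, bool transposed) const
    {
        return transposed ? data[c * cols + r] : data[r * cols + c];
    }
};

Matrix matMul(const Matrix& a, bool transpose0, const Matrix& b, bool transpose1)
{
    // Shapes after applying op(): op(A) is m x k, op(B) is kB x n.
    const std::size_t m = transpose0 ? a.cols : a.rows;
    const std::size_t k = transpose0 ? a.rows : a.cols;
    const std::size_t kB = transpose1 ? b.cols : b.rows;
    const std::size_t n = transpose1 ? b.rows : b.cols;
    assert(k == kB && "inner dimensions of op(A) and op(B) must match");
    (void)kB; // only used by the assertion

    Matrix c{m, n, std::vector<float>(m * n, 0.0f)};
    for (std::size_t i = 0; i < m; ++i)
        for (std::size_t j = 0; j < n; ++j)
            for (std::size_t p = 0; p < k; ++p)
                c.data[i * n + j] += a.at(i, p, transpose0) * b.at(p, j, transpose1);
    return c;
}
```

With A = [1 2 3; 4 5 6], B = [1 0 1; 0 1 0] and transpose1 = true, the result is the 2x2 matrix [4 2; 10 5].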

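| virtual IConstantLayer * nvinfer1::INetworkDefinition::addConstant (Dims dimensions, Weights weights) | pure virtual |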

Add a constant layer to the network.

| dimensions | The dimensions of the constant. |

| weights | The constant value, represented as weights. |

If weights.type is DataType::kINT32, the output is a tensor of 32-bit indices. Otherwise the output is a tensor of real values and the output type will follow TensorRT's normal precision rules.

If tensors in the network have an implicit batch dimension, the constant is broadcast over that dimension.

If a wildcard dimension is used, the volume of the runtime dimensions must equal the number of weights specified.

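| virtual IRNNv2Layer * nvinfer1::INetworkDefinition::addRNNv2 (ITensor &input, int32_t layerCount, int32_t hiddenSize, int32_t maxSeqLen, RNNOperation op) | deprecated, pure virtual |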

Add a layerCount-deep RNN layer to the network with hiddenSize internal states that can take a batch with fixed or variable sequence lengths.

| input | The input tensor to the layer (see below). |

| layerCount | The number of layers in the RNN. |

| hiddenSize | Size of the internal hidden state for each layer. |

| maxSeqLen | Maximum sequence length for the input. |

| op | The type of RNN to execute. |

By default, the layer is configured with RNNDirection::kUNIDIRECTION and RNNInputMode::kLINEAR. To change these settings, use IRNNv2Layer::setDirection() and IRNNv2Layer::setInputMode().

Weights and biases for the added layer should be set using IRNNv2Layer::setWeightsForGate() and IRNNv2Layer::setBiasForGate() prior to building an engine using this network.

The input tensors must be of the type DataType::kFLOAT or DataType::kHALF. The layout of the weights is row major and must be the same datatype as the input tensor. weights contain 8 matrices and bias contains 8 vectors.

See IRNNv2Layer::setWeightsForGate() and IRNNv2Layer::setBiasForGate() for details on the required input format for weights and bias.

The input ITensor should contain zero or more index dimensions {N1, ..., Np}, followed by two dimensions, defined as follows:

- S_max is the maximum allowed sequence length (number of RNN iterations).
- E specifies the embedding length (unless ::kSKIP is set, in which case it should match getHiddenSize()).

By default, all sequences in the input are assumed to be size maxSeqLen. To provide explicit sequence lengths for each input sequence in the batch, use IRNNv2Layer::setSequenceLengths().

The RNN layer outputs up to three tensors.

The first output tensor is the output of the final RNN layer across all timesteps, with dimensions {N1, ..., Np, S_max, H}:

- N1..Np are the index dimensions specified by the input tensor.
- S_max is the maximum allowed sequence length (number of RNN iterations).
- H is an output hidden state (equal to getHiddenSize() or 2x getHiddenSize()).

The second tensor is the final hidden state of the RNN across all layers, and if the RNN is an LSTM (i.e. getOperation() is ::kLSTM), then the third tensor is the final cell state of the RNN across all layers. Both the second and third output tensors have dimensions {N1, ..., Np, L, H}:

- N1..Np are the index dimensions specified by the input tensor.
- L is the number of layers in the RNN, equal to getLayerCount() if getDirection() is ::kUNIDIRECTION, and 2x getLayerCount() if getDirection() is ::kBIDIRECTION. In the bidirectional case, layer l's final forward hidden state is stored in L = 2*l, and its final backward hidden state is stored in L = 2*l + 1.
- H is the hidden state for each layer, equal to getHiddenSize().

| virtual IPluginLayer * nvinfer1::INetworkDefinition::addPluginExt (ITensor *const *inputs, int32_t nbInputs, IPluginExt &plugin) | deprecated, pure virtual |

Add a plugin layer to the network using an IPluginExt interface.

| inputs | The input tensors to the layer. |

| nbInputs | The number of input tensors. |

| plugin | The layer plugin. |

| virtual IIdentityLayer * nvinfer1::INetworkDefinition::addIdentity (ITensor &input) | pure virtual |

Add an identity layer.

| input | The input tensor to the layer. |

| virtual void nvinfer1::INetworkDefinition::removeTensor (ITensor &tensor) | pure virtual |

Remove a tensor from the network definition.

| tensor | The tensor to remove. |

It is illegal to remove a tensor that is the input or output of a layer. If this method is called with such a tensor, a warning will be emitted on the log and the call will be ignored. Its intended use is to remove detached tensors after, e.g., concatenating two networks with ILayer::setInput().

| virtual void nvinfer1::INetworkDefinition::unmarkOutput (ITensor &tensor) | pure virtual |

Unmark a tensor as a network output.

| tensor | The tensor to unmark as an output tensor. |

See markOutput().

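| virtual IPluginV2Layer * nvinfer1::INetworkDefinition::addPluginV2 (ITensor *const *inputs, int32_t nbInputs, IPluginV2 &plugin) | pure virtual |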

Add a plugin layer to the network using the IPluginV2 interface.

| inputs | The input tensors to the layer. |

| nbInputs | The number of input tensors. |

| plugin | The layer plugin. |

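| virtual ISliceLayer * nvinfer1::INetworkDefinition::addSlice (ITensor &input, Dims start, Dims size, Dims stride) | pure virtual |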

Add a slice layer to the network.

| input | The input tensor to the layer. |

| start | The start offset. |

| size | The output dimensions. |

| stride | The slicing stride. |

Positive, negative, zero stride values, and combinations of them in different dimensions are allowed.
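How start, size and stride combine is easiest to see in one dimension. The sketch below is plain C++, not the TensorRT API, and assumes the in-bounds case: output element i is input[start + i * stride] for i in [0, size), so a negative stride walks backwards and a zero stride repeats one element. slice1d is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One-dimensional sketch in plain C++ (not the TensorRT API) of slice
// indexing, assuming every accessed index is in bounds:
//   output[i] = input[start + i * stride],  0 <= i < size
// Negative strides walk backwards; a zero stride repeats input[start].
std::vector<float> slice1d(const std::vector<float>& input,
                           int32_t start, int32_t size, int32_t stride)
{
    assert(size >= 0);
    std::vector<float> out;
    out.reserve(static_cast<std::size_t>(size));
    for (int32_t i = 0; i < size; ++i)
    {
        const int64_t idx = int64_t{start} + int64_t{i} * stride;
        assert(idx >= 0 && idx < static_cast<int64_t>(input.size()));
        out.push_back(input[static_cast<std::size_t>(idx)]);
    }
    return out;
}
```

On the input {0, 1, 2, 3, 4, 5}, (start 1, size 3, stride 2) gives {1, 3, 5}, (start 5, size 3, stride -2) gives {5, 3, 1}, and (start 2, size 3, stride 0) gives {2, 2, 2}. In N dimensions, the same rule applies independently per axis.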

| virtual void nvinfer1::INetworkDefinition::setName (const char *name) | pure virtual |

Sets the name of the network.

| name | The name to assign to this network. |

Set the name of the network so that it can be associated with a built engine. The name must be a null-terminated C-style string of length no greater than 128 characters. TensorRT makes no use of this string except storing it as part of the engine so that it may be retrieved at runtime. A name unique to the builder will be generated by default.

This method copies the name string.

| virtual const char * nvinfer1::INetworkDefinition::getName () const | pure virtual |

Returns the name associated with the network.

The memory pointed to by getName() is owned by the INetworkDefinition object.

| virtual IShapeLayer * nvinfer1::INetworkDefinition::addShape (ITensor &input) | pure virtual |

Add a shape layer to the network.

| input | The input tensor to the layer. |

| virtual bool nvinfer1::INetworkDefinition::hasImplicitBatchDimension () const | pure virtual |

Query whether the network was created with an implicit batch dimension.

This is a network-wide property. Either all tensors in the network have an implicit batch dimension or none of them do.

hasImplicitBatchDimension() is true if and only if this INetworkDefinition was created with createNetwork() or createNetworkV2() without NetworkDefinitionCreationFlag::kEXPLICIT_BATCH flag.

| virtual bool nvinfer1::INetworkDefinition::markOutputForShapes (ITensor &tensor) | pure virtual |

Enable the tensor's value to be computed by IExecutionContext::getShapeBinding.

The tensor must be of type DataType::kINT32 and have no more than one dimension.

| virtual bool nvinfer1::INetworkDefinition::unmarkOutputForShapes (ITensor &tensor) | pure virtual |

Undo markOutputForShapes.

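| virtual IParametricReLULayer * nvinfer1::INetworkDefinition::addParametricReLU (ITensor &input, ITensor &slope) noexcept | pure virtual, noexcept |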

Add a parametric ReLU layer to the network.

| input | The input tensor to the layer. |

| slope | The slope tensor to the layer. This tensor should be unidirectionally broadcastable to the input tensor. |
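A CPU reference helps pin down what the slope tensor does. The sketch below is plain C++, not the TensorRT API, and uses the standard parametric ReLU definition (y = x for x >= 0, otherwise y = slope * x). For brevity it assumes the slope tensor has the same rank as the input with each dimension either 1 or equal to the input's; full unidirectional broadcasting also allows a lower-rank slope, which is not modelled here. parametricRelu is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Reference sketch in plain C++ (not the TensorRT API) of parametric ReLU:
//   y = x          when x >= 0
//   y = slope * x  otherwise
// Assumption: slope has the same rank as x, and each slope dimension is
// either 1 (broadcast) or equal to the input dimension. Row-major layout.
std::vector<float> parametricRelu(const std::vector<float>& x, const std::vector<int32_t>& xDims,
                                  const std::vector<float>& slope, const std::vector<int32_t>& sDims)
{
    assert(xDims.size() == sDims.size());
    const std::size_t rank = xDims.size();
    std::vector<float> y(x.size());
    std::vector<int32_t> coord(rank, 0); // multi-index of the current element
    for (std::size_t flat = 0; flat < x.size(); ++flat)
    {
        // Locate the slope element; broadcast (length-1) dims use index 0.
        std::size_t sFlat = 0;
        for (std::size_t d = 0; d < rank; ++d)
        {
            assert(sDims[d] == 1 || sDims[d] == xDims[d]);
            const int32_t idx = sDims[d] == 1 ? 0 : coord[d];
            sFlat = sFlat * static_cast<std::size_t>(sDims[d]) + static_cast<std::size_t>(idx);
        }
        const float v = x[flat];
        y[flat] = v >= 0.0f ? v : slope[sFlat] * v;
        // Advance the row-major multi-index (last dimension fastest).
        for (std::size_t d = rank; d-- > 0;)
        {
            if (++coord[d] < xDims[d])
            {
                break;
            }
            coord[d] = 0;
        }
    }
    return y;
}
```

With a {2, 2} input {-1, 2, -3, 4} and a per-row slope of shape {2, 1} holding {0.5, 0.25}, the result is {-0.5, 2, -0.75, 4}.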

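| virtual IConvolutionLayer * nvinfer1::INetworkDefinition::addConvolutionNd (ITensor &input, int32_t nbOutputMaps, Dims kernelSize, Weights kernelWeights, Weights biasWeights) | pure virtual |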

Add a multi-dimension convolution layer to the network.

| input | The input tensor to the convolution. |

| nbOutputMaps | The number of output feature maps for the convolution. |

| kernelSize | The multi-dimensions of the convolution kernel. |

| kernelWeights | The kernel weights for the convolution. |

| biasWeights | The optional bias weights for the convolution. |

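| virtual IPoolingLayer * nvinfer1::INetworkDefinition::addPoolingNd (ITensor &input, PoolingType type, Dims windowSize) | pure virtual |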

Add a multi-dimension pooling layer to the network.

| input | The input tensor to the layer. |

| type | The type of pooling to apply. |

| windowSize | The size of the pooling window. |

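| virtual IDeconvolutionLayer * nvinfer1::INetworkDefinition::addDeconvolutionNd (ITensor &input, int32_t nbOutputMaps, Dims kernelSize, Weights kernelWeights, Weights biasWeights) | pure virtual |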

Add a multi-dimension deconvolution layer to the network.

| input | The input tensor to the layer. |

| nbOutputMaps | The number of output feature maps. |

| kernelSize | The multi-dimensions of the deconvolution kernel. |

| kernelWeights | The kernel weights for the deconvolution. |

| biasWeights | The optional bias weights for the deconvolution. |

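| virtual IScaleLayer * nvinfer1::INetworkDefinition::addScaleNd (ITensor &input, ScaleMode mode, Weights shift, Weights scale, Weights power, int32_t channelAxis) | pure virtual |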

Add a multi-dimension scale layer to the network.

| input | The input tensor to the layer. |

| mode | The scaling mode. |

| shift | The shift value. |

| scale | The scale value. |

| power | The power value. |

| channelAxis | The channel axis. |

If the weights are available, then the number of weights depends on the ScaleMode. For ::kUNIFORM, the number of weights equals 1. For ::kCHANNEL, the number of weights equals the channel dimension. For ::kELEMENTWISE, the number of weights equals the product of all input dimensions at channelAxis and beyond.

For example, if the input dimensions are [A,B,C,D,E,F] and channelAxis=2: for ::kUNIFORM, the number of weights is 1; for ::kCHANNEL, the number of weights is C; for ::kELEMENTWISE, the number of weights is C*D*E*F.
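The weight-count rule above translates directly into a small function. This is a hypothetical helper, not a TensorRT API; ScaleModeSketch is a stand-in enum that mirrors the three ScaleMode values named in the text but is not nvinfer1::ScaleMode itself.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stand-in for the three scale modes named above (not nvinfer1::ScaleMode).
enum class ScaleModeSketch
{
    kUNIFORM,
    kCHANNEL,
    kELEMENTWISE
};

// Hypothetical helper, not part of the TensorRT API: the number of shift,
// scale and power weights addScaleNd() expects for a given mode, input
// shape and channelAxis, following the documented rule.
int64_t scaleWeightCount(ScaleModeSketch mode, const std::vector<int32_t>& dims, int32_t channelAxis)
{
    assert(channelAxis >= 0 && channelAxis < static_cast<int32_t>(dims.size()));
    switch (mode)
    {
    case ScaleModeSketch::kUNIFORM:
        return 1;
    case ScaleModeSketch::kCHANNEL:
        return dims[static_cast<std::size_t>(channelAxis)];
    case ScaleModeSketch::kELEMENTWISE:
    {
        // Product of all dimensions at channelAxis and beyond.
        int64_t count = 1;
        for (std::size_t i = static_cast<std::size_t>(channelAxis); i < dims.size(); ++i)
        {
            count *= dims[i];
        }
        return count;
    }
    }
    return 0; // unreachable for valid enum values
}
```

For dimensions {2, 3, 4, 5, 6, 7} with channelAxis = 2, this gives 1, 4 and 4*5*6*7 = 840 weights for the three modes, matching the [A,B,C,D,E,F] example.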

| virtual IResizeLayer * nvinfer1::INetworkDefinition::addResize (ITensor &input) | pure virtual |

Add a resize layer to the network.

| input | The input tensor to the layer. |

| virtual bool nvinfer1::INetworkDefinition::hasExplicitPrecision () const | pure virtual |

True if the network is an explicit precision network.

hasExplicitPrecision() is true if and only if this INetworkDefinition was created with createNetworkV2() with NetworkDefinitionCreationFlag::kEXPLICIT_PRECISION set.

| virtual ILoop * nvinfer1::INetworkDefinition::addLoop () noexcept | pure virtual, noexcept |

Add a loop to the network.

An ILoop provides a way to specify a recurrent subgraph.

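| virtual ISelectLayer * nvinfer1::INetworkDefinition::addSelect (ITensor &condition, ITensor &thenInput, ITensor &elseInput) | pure virtual |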

Add a select layer to the network.

| condition | The condition tensor to the layer. |

| thenInput | The "then" input tensor to the layer. |

| elseInput | The "else" input tensor to the layer. |
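The select operation picks each output element from one of two inputs. The sketch below is plain C++, not the TensorRT API, and assumes all three tensors share one shape and flattening; broadcasting between them is not modelled. selectElementwise is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Reference sketch in plain C++ (not the TensorRT API) of an elementwise
// select: out[i] = condition[i] ? thenInput[i] : elseInput[i].
// Assumption: identical shapes for all three tensors, no broadcasting.
template <typename T>
std::vector<T> selectElementwise(const std::vector<bool>& condition,
                                 const std::vector<T>& thenInput,
                                 const std::vector<T>& elseInput)
{
    assert(condition.size() == thenInput.size() && thenInput.size() == elseInput.size());
    std::vector<T> out;
    out.reserve(condition.size());
    for (std::size_t i = 0; i < condition.size(); ++i)
    {
        out.push_back(condition[i] ? thenInput[i] : elseInput[i]);
    }
    return out;
}
```

Selecting with condition {true, false, true} between {1, 2, 3} and {10, 20, 30} yields {1, 20, 3}.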

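| virtual IFillLayer * nvinfer1::INetworkDefinition::addFill (Dims dimensions, FillOperation op) noexcept | pure virtual, noexcept |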

Add a fill layer to the network.

| dimensions | The output tensor dimensions. |

| op | The fill operation that the layer applies. |

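| virtual IPaddingLayer * nvinfer1::INetworkDefinition::addPaddingNd (ITensor &input, Dims prePadding, Dims postPadding) | pure virtual |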

Add a padding layer to the network.

Only 2D padding is currently supported.

| input | The input tensor to the layer. |

| prePadding | The padding to apply to the start of the tensor. |

| postPadding | The padding to apply to the end of the tensor. |
