|

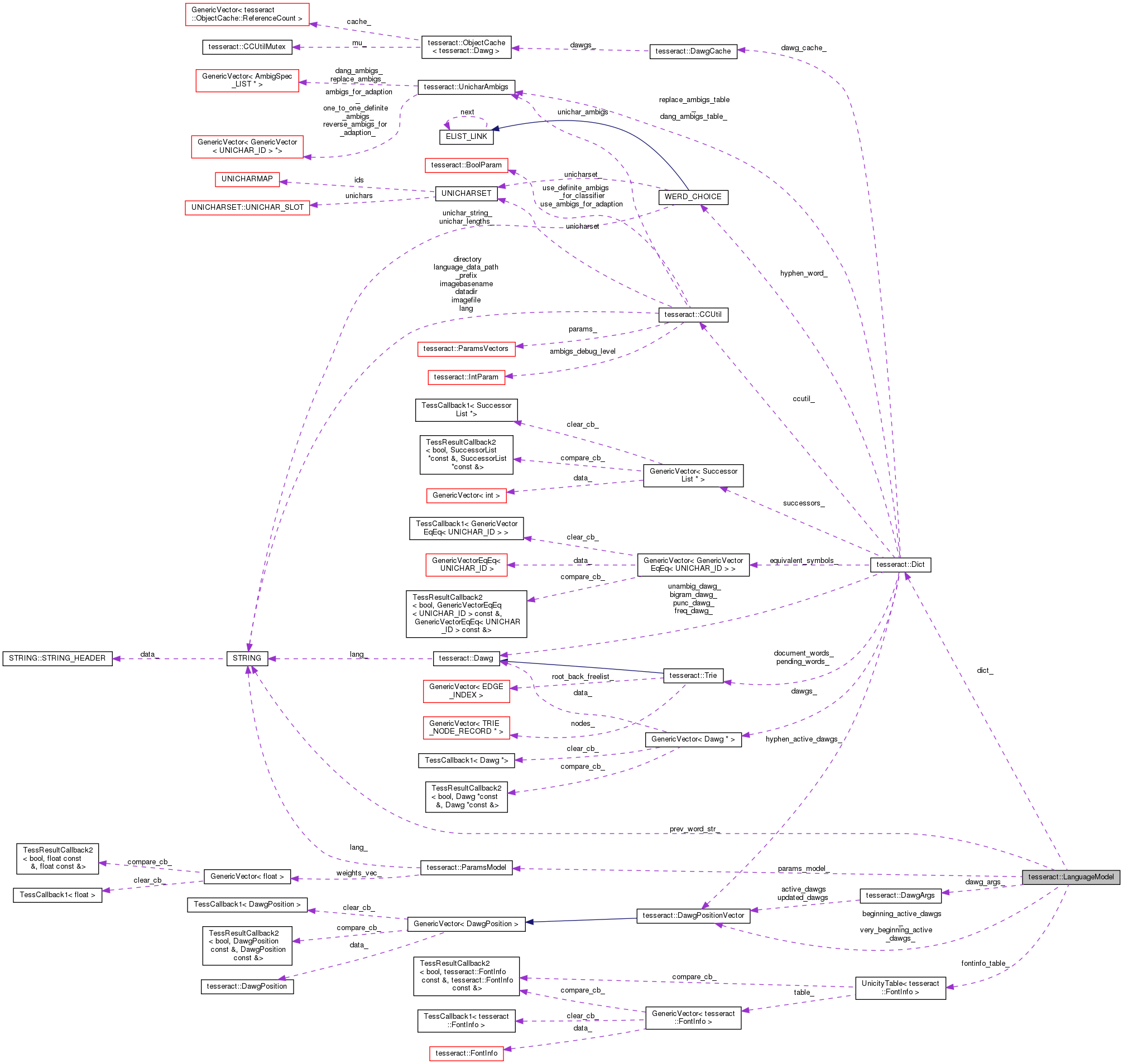

| Type | Member |
|---|---|
| | `LanguageModel (const UnicityTable< FontInfo > *fontinfo_table, Dict *dict)` |
| | `~LanguageModel ()` |
| `void` | `InitForWord (const WERD_CHOICE *prev_word, bool fixed_pitch, float max_char_wh_ratio, float rating_cert_scale)` |
| `bool` | `UpdateState (bool just_classified, int curr_col, int curr_row, BLOB_CHOICE_LIST *curr_list, LanguageModelState *parent_node, LMPainPoints *pain_points, WERD_RES *word_res, BestChoiceBundle *best_choice_bundle, BlamerBundle *blamer_bundle)` |
| `bool` | `AcceptableChoiceFound ()` |
| `void` | `SetAcceptableChoiceFound (bool val)` |
| `ParamsModel &` | `getParamsModel ()` |
| | `INT_VAR_H (language_model_debug_level, 0, "Language model debug level")` |
| | `BOOL_VAR_H (language_model_ngram_on, false, "Turn on/off the use of character ngram model")` |
| | `INT_VAR_H (language_model_ngram_order, 8, "Maximum order of the character ngram model")` |
| | `INT_VAR_H (language_model_viterbi_list_max_num_prunable, 10, "Maximum number of prunable (those for which PrunablePath() is" " true) entries in each viterbi list recorded in BLOB_CHOICEs")` |
| | `INT_VAR_H (language_model_viterbi_list_max_size, 500, "Maximum size of viterbi lists recorded in BLOB_CHOICEs")` |
| | `double_VAR_H (language_model_ngram_small_prob, 0.000001, "To avoid overly small denominators use this as the floor" " of the probability returned by the ngram model")` |
| | `double_VAR_H (language_model_ngram_nonmatch_score, -40.0, "Average classifier score of a non-matching unichar")` |
| | `BOOL_VAR_H (language_model_ngram_use_only_first_uft8_step, false, "Use only the first UTF8 step of the given string" " when computing log probabilities")` |
| | `double_VAR_H (language_model_ngram_scale_factor, 0.03, "Strength of the character ngram model relative to the" " character classifier ")` |
| | `double_VAR_H (language_model_ngram_rating_factor, 16.0, "Factor to bring log-probs into the same range as ratings" " when multiplied by outline length ")` |
| | `BOOL_VAR_H (language_model_ngram_space_delimited_language, true, "Words are delimited by space")` |
| | `INT_VAR_H (language_model_min_compound_length, 3, "Minimum length of compound words")` |
| | `double_VAR_H (language_model_penalty_non_freq_dict_word, 0.1, "Penalty for words not in the frequent word dictionary")` |
| | `double_VAR_H (language_model_penalty_non_dict_word, 0.15, "Penalty for non-dictionary words")` |
| | `double_VAR_H (language_model_penalty_punc, 0.2, "Penalty for inconsistent punctuation")` |
| | `double_VAR_H (language_model_penalty_case, 0.1, "Penalty for inconsistent case")` |
| | `double_VAR_H (language_model_penalty_script, 0.5, "Penalty for inconsistent script")` |
| | `double_VAR_H (language_model_penalty_chartype, 0.3, "Penalty for inconsistent character type")` |
| | `double_VAR_H (language_model_penalty_font, 0.00, "Penalty for inconsistent font")` |
| | `double_VAR_H (language_model_penalty_spacing, 0.05, "Penalty for inconsistent spacing")` |
| | `double_VAR_H (language_model_penalty_increment, 0.01, "Penalty increment")` |
| | `INT_VAR_H (wordrec_display_segmentations, 0, "Display Segmentations")` |
| | `BOOL_VAR_H (language_model_use_sigmoidal_certainty, false, "Use sigmoidal score for certainty")` |
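The penalty parameters above are per-problem weights, and `ComputeAdjustment(int num_problems, float penalty)` turns a count of problems into an adjustment. The sketch below assumes one plausible rule: zero problems give no adjustment, one problem gives the base penalty, and each additional problem adds `language_model_penalty_increment`. That rule is an assumption to be checked against the Tesseract sources; `ComputeAdjustmentSketch` and `PenaltyParams` are hypothetical names, and the default values are copied from the table above.

```cpp
#include <cassert>

// Hypothetical container; the default values are copied from the
// parameter table (language_model_penalty_case, _punc, _increment).
struct PenaltyParams {
  float penalty_case = 0.1f;
  float penalty_punc = 0.2f;
  float penalty_increment = 0.01f;
};

// Assumed rule: no problems -> no adjustment; one problem -> the base
// penalty; every further problem adds penalty_increment on top of it.
float ComputeAdjustmentSketch(int num_problems, float penalty,
                              float penalty_increment) {
  assert(num_problems >= 0);
  if (num_problems == 0) return 0.0f;
  if (num_problems == 1) return penalty;
  return penalty + penalty_increment * static_cast<float>(num_problems - 1);
}
```

Under this assumed rule and the default values, three case inconsistencies would give 0.1 + 2 * 0.01 = 0.12.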


| Type | Member |
|---|---|
| `float` | `CertaintyScore (float cert)` |
| `float` | `ComputeAdjustment (int num_problems, float penalty)` |
| `float` | `ComputeConsistencyAdjustment (const LanguageModelDawgInfo *dawg_info, const LMConsistencyInfo &consistency_info)` |
| `float` | `ComputeAdjustedPathCost (ViterbiStateEntry *vse)` |
| `bool` | `GetTopLowerUpperDigit (BLOB_CHOICE_LIST *curr_list, BLOB_CHOICE **first_lower, BLOB_CHOICE **first_upper, BLOB_CHOICE **first_digit) const` |
| `int` | `SetTopParentLowerUpperDigit (LanguageModelState *parent_node) const` |
| `ViterbiStateEntry *` | `GetNextParentVSE (bool just_classified, bool mixed_alnum, const BLOB_CHOICE *bc, LanguageModelFlagsType blob_choice_flags, const UNICHARSET &unicharset, WERD_RES *word_res, ViterbiStateEntry_IT *vse_it, LanguageModelFlagsType *top_choice_flags) const` |
| `bool` | `AddViterbiStateEntry (LanguageModelFlagsType top_choice_flags, float denom, bool word_end, int curr_col, int curr_row, BLOB_CHOICE *b, LanguageModelState *curr_state, ViterbiStateEntry *parent_vse, LMPainPoints *pain_points, WERD_RES *word_res, BestChoiceBundle *best_choice_bundle, BlamerBundle *blamer_bundle)` |
| `void` | `GenerateTopChoiceInfo (ViterbiStateEntry *new_vse, const ViterbiStateEntry *parent_vse, LanguageModelState *lms)` |
| `LanguageModelDawgInfo *` | `GenerateDawgInfo (bool word_end, int curr_col, int curr_row, const BLOB_CHOICE &b, const ViterbiStateEntry *parent_vse)` |
| `LanguageModelNgramInfo *` | `GenerateNgramInfo (const char *unichar, float certainty, float denom, int curr_col, int curr_row, float outline_length, const ViterbiStateEntry *parent_vse)` |
| `float` | `ComputeNgramCost (const char *unichar, float certainty, float denom, const char *context, int *unichar_step_len, bool *found_small_prob, float *ngram_prob)` |
| `float` | `ComputeDenom (BLOB_CHOICE_LIST *curr_list)` |
| `void` | `FillConsistencyInfo (int curr_col, bool word_end, BLOB_CHOICE *b, ViterbiStateEntry *parent_vse, WERD_RES *word_res, LMConsistencyInfo *consistency_info)` |
| `void` | `UpdateBestChoice (ViterbiStateEntry *vse, LMPainPoints *pain_points, WERD_RES *word_res, BestChoiceBundle *best_choice_bundle, BlamerBundle *blamer_bundle)` |
| `WERD_CHOICE *` | `ConstructWord (ViterbiStateEntry *vse, WERD_RES *word_res, DANGERR *fixpt, BlamerBundle *blamer_bundle, bool *truth_path)` |
| `void` | `ComputeAssociateStats (int col, int row, float max_char_wh_ratio, ViterbiStateEntry *parent_vse, WERD_RES *word_res, AssociateStats *associate_stats)` |
| `bool` | `PrunablePath (const ViterbiStateEntry &vse)` |
| `bool` | `AcceptablePath (const ViterbiStateEntry &vse)` |
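`CertaintyScore(float cert)` converts a classifier certainty into a score, and `language_model_use_sigmoidal_certainty` selects between two mappings. The sketch below assumes a reciprocal mapping (`-1 / cert`) by default and, in sigmoidal mode, a logistic curve over the certainty normalized by a certainty scale. The function name, the slope of 10 and the `certainty_scale` argument (a stand-in for the dictionary's scale) are assumptions for illustration, not the documented Tesseract implementation.

```cpp
#include <cassert>
#include <cmath>

// Sketch of a certainty-to-score mapping (assumed, see lead-in).
// cert: classifier certainty, <= 0, where values closer to 0 are better.
// certainty_scale: hypothetical stand-in for the dictionary's scale, > 0.
float CertaintyScoreSketch(float cert, bool use_sigmoidal,
                           float certainty_scale) {
  assert(certainty_scale > 0.0f);
  if (use_sigmoidal) {
    // Normalize so that cert in [-certainty_scale, 0] maps to [0, 1], then
    // apply a logistic curve: 0 -> 0.5, 1 -> about 4.5e-5.
    float normalized = -cert / certainty_scale;
    return 1.0f / (1.0f + std::exp(10.0f * normalized));
  }
  // Reciprocal mode: defined only for strictly negative certainties.
  assert(cert < 0.0f);
  return -1.0f / cert;
}
```

Both modes rank certainties closer to zero higher. The reciprocal form grows without bound as `cert` approaches 0, whereas the sigmoid stays within (0, 0.5] for any non-positive certainty.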

UpdateState combines the ViterbiStateEntry list held by the parent state (parent_node) with each of the blob choices in curr_list, creating a new ViterbiStateEntry for each sensible path.

The number of combinations could be huge, creating a lot of work only for most of it to be truncated by a beam limit, so only certain kinds of paths are continued at the next step:

- paths that are favored by the language model, i.e. by a DAWG or, when it is enabled, the character n-gram model.

- paths that represent some kind of top choice. The old permuter permuted the top raw classifier score, the top upper-case word and the top lower-case word. UpdateState now concentrates its top-choice paths on the top lower-case, top upper-case (or caseless alpha) and top digit sequences, and allows these paths to continue through blobs whose choice list contains no such character.
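The three top-choice categories can be illustrated by scanning one blob's choice list, assumed sorted best-first, for its first lower-case, upper-case and digit entries, which is what the name and signature of `GetTopLowerUpperDigit` suggest it reports. The `Choice` and `TopChoices` types and the ASCII-only `TopLowerUpperDigit` function are hypothetical simplifications; the real code works on `BLOB_CHOICE` lists and `UNICHARSET` properties, and the caseless-alpha case is not modelled here.

```cpp
#include <cassert>
#include <cctype>
#include <vector>

// Hypothetical, simplified blob choice: one ASCII character and its rating
// (lower is better). Lists are assumed to be sorted best-first.
struct Choice {
  char ch;
  float rating;
};

// Indices of the first lower-case, upper-case and digit choices; -1 if absent.
struct TopChoices {
  int first_lower = -1;
  int first_upper = -1;
  int first_digit = -1;
};

TopChoices TopLowerUpperDigit(const std::vector<Choice>& choices) {
  TopChoices top;
  for (int i = 0; i < static_cast<int>(choices.size()); ++i) {
    // Check the best-first ordering assumption.
    assert(i == 0 || choices[i - 1].rating <= choices[i].rating);
    unsigned char c = static_cast<unsigned char>(choices[i].ch);
    if (std::islower(c)) {
      if (top.first_lower < 0) top.first_lower = i;
    } else if (std::isupper(c)) {
      if (top.first_upper < 0) top.first_upper = i;
    } else if (std::isdigit(c)) {
      if (top.first_digit < 0) top.first_digit = i;
    }
  }
  return top;
}
```

For a blob whose choices are `l`, `1`, `I` in that order, this reports index 0 as the top lower-case choice, 1 as the top digit and 2 as the top upper-case choice.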

GetNextParentVSE enforces some of these restrictions to minimize the number of calls to AddViterbiStateEntry, even before the language model is consulted. Thus an n-blob sequence of [l1I] produces 3n calls to AddViterbiStateEntry instead of 3^n, since each of the three choices at a blob extends only the parent path of its own kind rather than every parent path.
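The 3n versus 3^n figures can be checked with a toy count. The sketch assumes every blob offers the three choices `l`, `1` and `I`, one per top-choice class, and compares a restricted search, in which each choice extends only the parent path of its own class, against unrestricted enumeration, in which each choice extends every parent path. The counted calls stand for `AddViterbiStateEntry` invocations; the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Toy model: each blob offers kChoicesPerBlob choices (e.g. l, 1, I), one per
// top-choice class. Counts stand for hypothetical AddViterbiStateEntry calls.
constexpr std::uint64_t kChoicesPerBlob = 3;

// Restricted: each choice extends only the single parent path of its own
// class, so every blob costs kChoicesPerBlob calls.
std::uint64_t RestrictedCalls(int num_blobs) {
  assert(num_blobs >= 0);
  return kChoicesPerBlob * static_cast<std::uint64_t>(num_blobs);
}

// Unrestricted: each choice extends every parent path, so the number of
// paths after k blobs is kChoicesPerBlob^k. Returns the total call count and
// stores the number of complete paths in *final_paths (if non-null).
std::uint64_t UnrestrictedCalls(int num_blobs, std::uint64_t* final_paths) {
  assert(num_blobs >= 0);
  std::uint64_t paths = 1;  // the empty path before the first blob
  std::uint64_t calls = 0;
  for (int k = 0; k < num_blobs; ++k) {
    calls += paths * kChoicesPerBlob;  // one call per (parent, choice) pair
    paths *= kChoicesPerBlob;
  }
  if (final_paths != nullptr) *final_paths = paths;
  return calls;
}
```

For four blobs the restricted search makes 12 calls, while unrestricted enumeration makes 3 + 9 + 27 + 81 = 120 calls and ends with 81 = 3^4 paths.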

Of course, it isn't quite that simple: Title Case is handled by allowing lower case to continue an upper-case initial, but this has to be detected in the combiner so that it knows which upper-case letters are initial alphas.
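A minimal, ASCII-only sketch of the Title Case distinction: a word is classified as all lower case, all upper case, Title Case (exactly one upper-case letter, at the start, with non-letters ignored) or mixed, and only the initial of a Title Case word is reported as an initial alpha. `CasePattern`, `ClassifyCasePattern` and `IsInitialUpper` are hypothetical names; this illustrates the idea, not Tesseract's combiner.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>

enum class CasePattern { kAllLower, kAllUpper, kTitle, kMixed };

// Hypothetical ASCII-only classifier. Words without upper-case letters
// (including those with no letters at all) count as kAllLower.
CasePattern ClassifyCasePattern(const std::string& word) {
  int lower = 0;
  int upper = 0;
  bool initial_upper = false;
  for (std::size_t i = 0; i < word.size(); ++i) {
    unsigned char c = static_cast<unsigned char>(word[i]);
    if (std::islower(c)) {
      ++lower;
    } else if (std::isupper(c)) {
      ++upper;
      if (i == 0) initial_upper = true;
    }
  }
  if (upper == 0) return CasePattern::kAllLower;
  if (lower == 0) return CasePattern::kAllUpper;
  // Title Case: exactly one upper-case letter, and it is the initial.
  if (upper == 1 && initial_upper) return CasePattern::kTitle;
  return CasePattern::kMixed;
}

// True if word[pos] is the upper-case initial of a Title Case word.
bool IsInitialUpper(const std::string& word, std::size_t pos) {
  assert(pos < word.size());
  return pos == 0 && ClassifyCasePattern(word) == CasePattern::kTitle;
}
```

Here "Word" is Title Case with W as its initial, while the W in "WORD" belongs to an all-upper-case word and is not reported as an initial.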